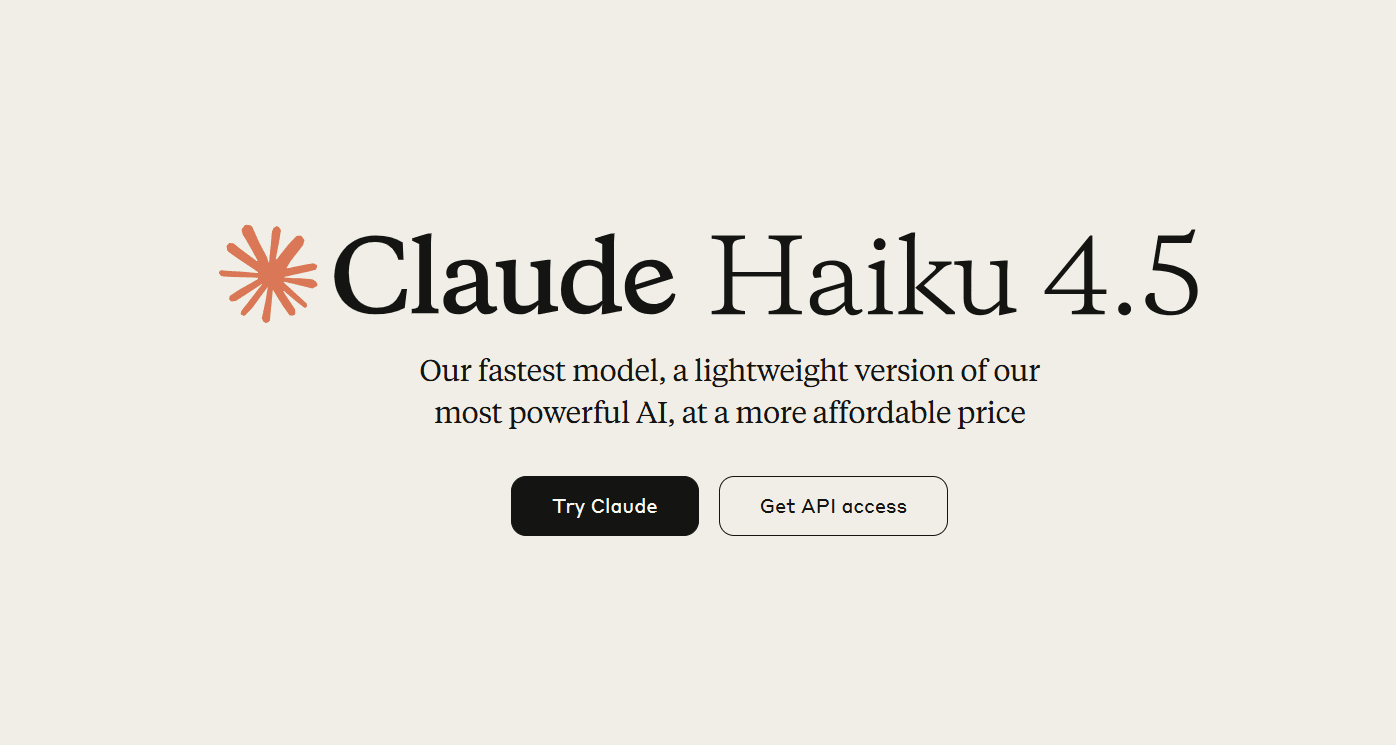

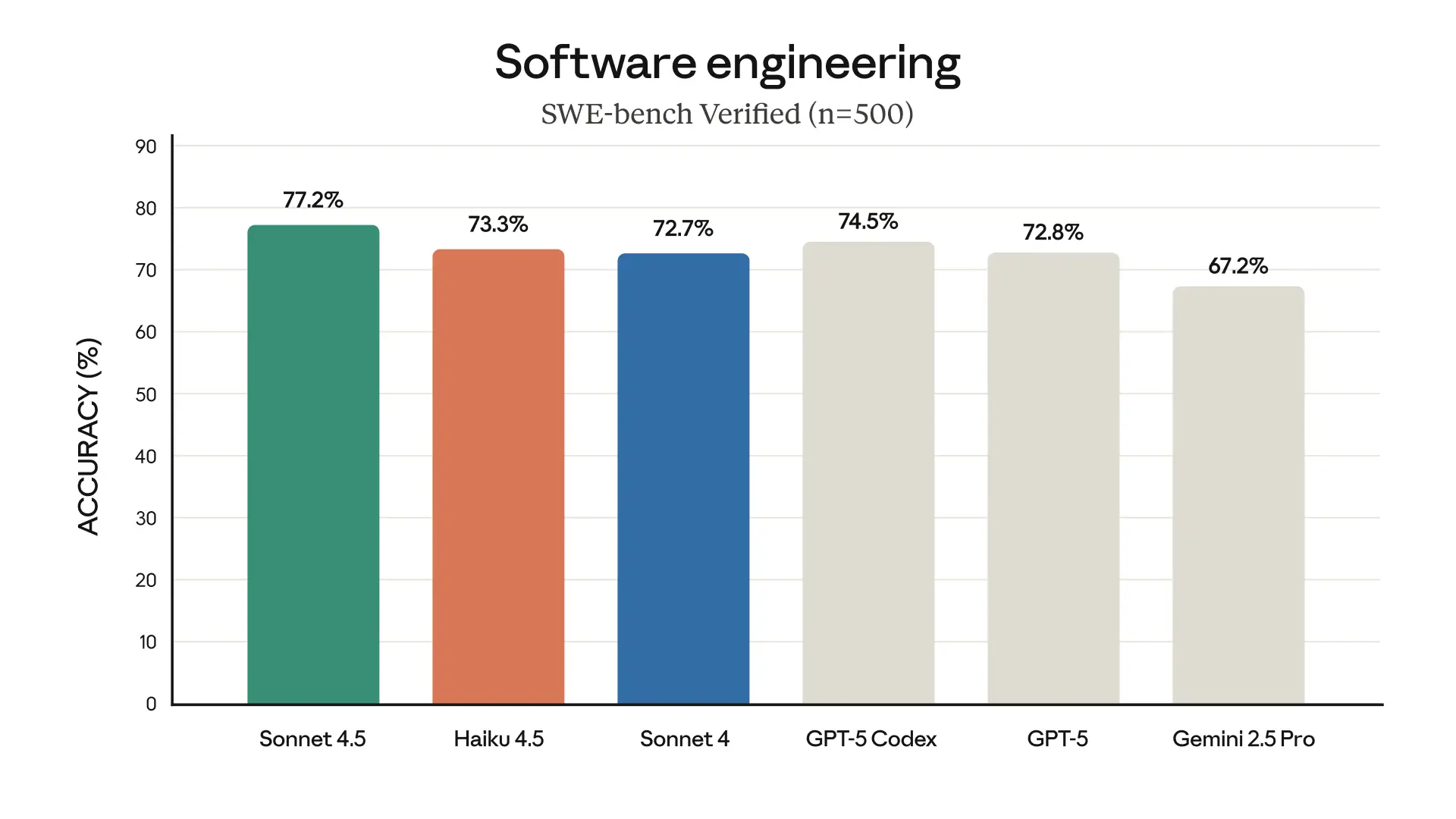

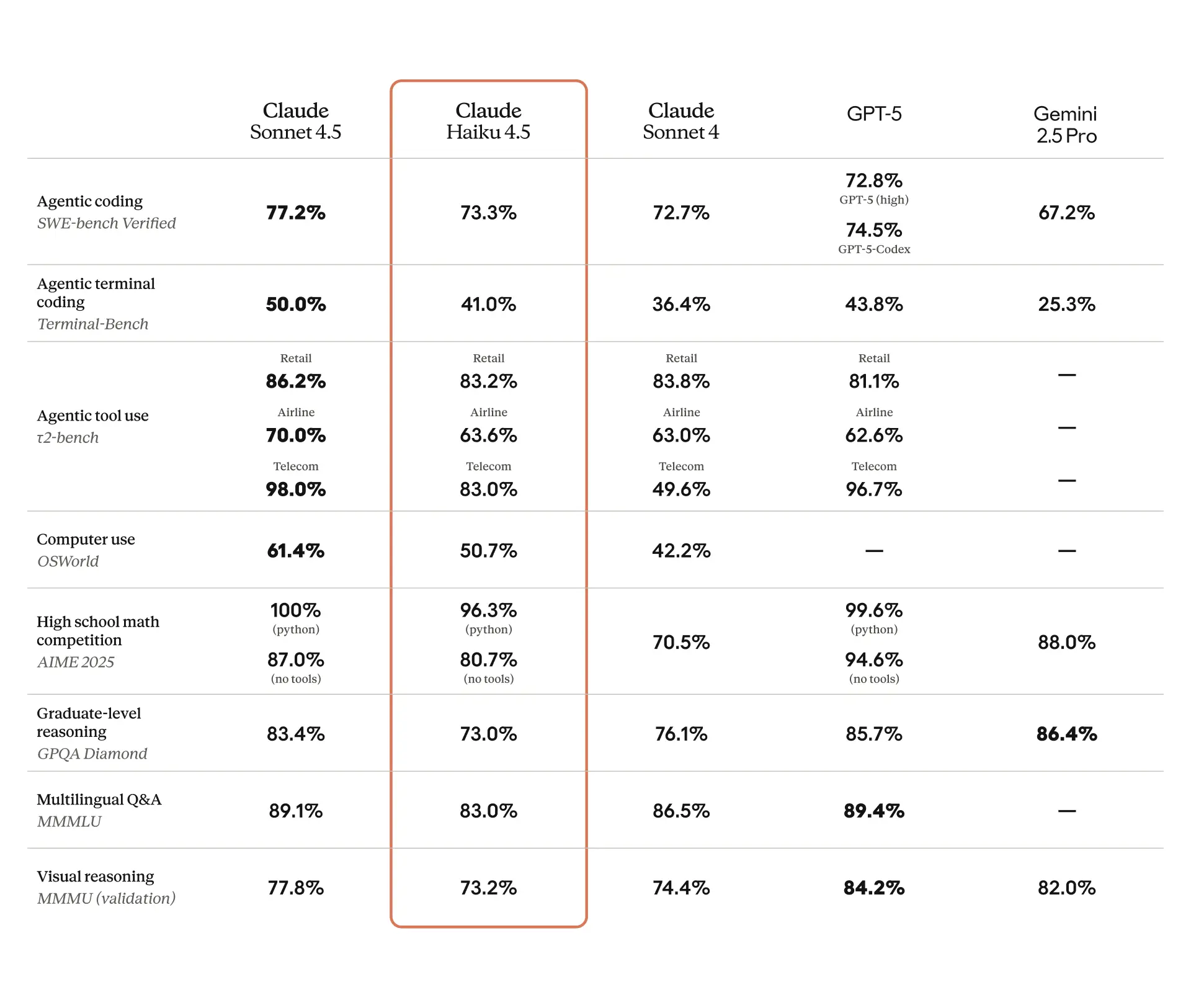

Claude Haiku 4.5 offers near-frontier AI performance at more than twice the speed and one-third the cost of Claude Sonnet 4, according to Anthropic. Here is what that changes for teams deploying AI.

You needed an AI model to generate clean, working code snippets during your live product demo. Every millisecond counted. You fired up Claude Haiku 4.5. It didn’t just keep up — it flew past your expectations. Faster than Sonnet. Cheaper than GPT. Surprisingly capable. And in that moment, you realized: smart doesn’t have to mean slow.

Claude Haiku 4.5 is Anthropic’s newest lightweight language model optimized for speed, cost-efficiency, and high utility. It delivers near-frontier results on common benchmarks while drastically reducing latency and cost.

Designed to power everyday AI tasks such as coding, summarization, and data analysis, Haiku 4.5 is fast enough for interactive, real-time use.

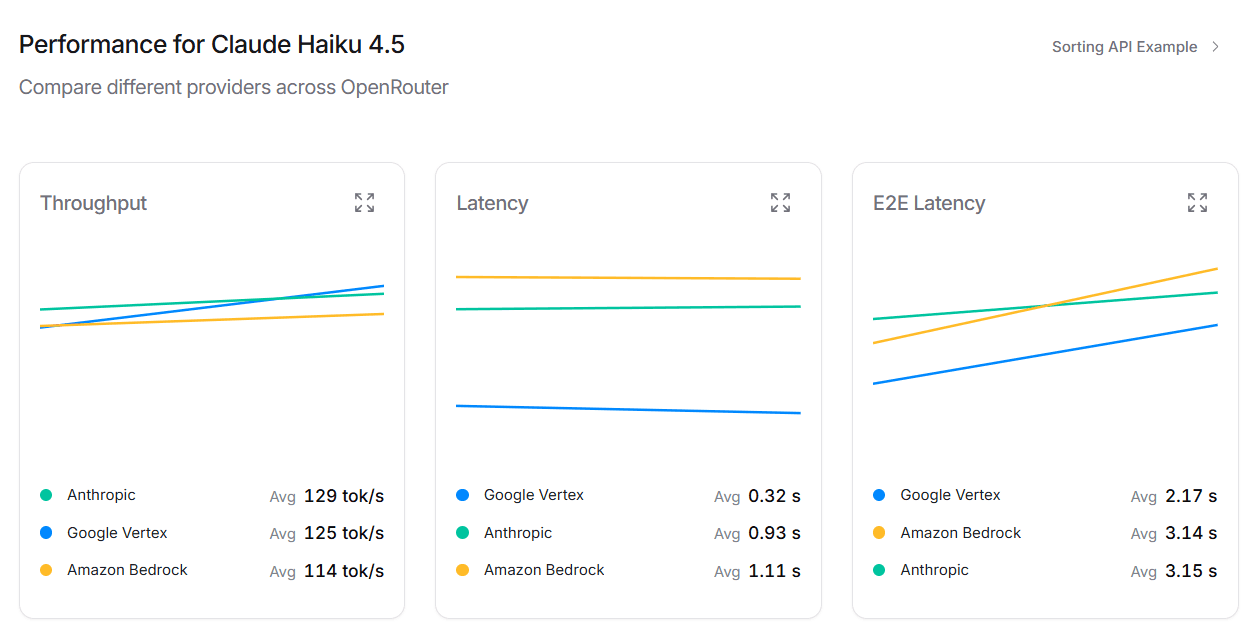

Most organizations struggle to justify large-scale deployment of frontier models because of cost. Haiku 4.5 changes the math:
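As a rough illustration of that cost math, the sketch below compares a monthly bill for the same workload on a smaller and a larger model. The prices, traffic volume, and token counts are assumptions chosen only to reflect the one-third cost ratio mentioned above; they are not official figures, so check current pricing before relying on them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD per one million tokens (illustrative values, not official prices)."""
    input_per_mtok: float
    output_per_mtok: float


def monthly_cost(pricing: Pricing, requests_per_day: int,
                 input_tokens: int, output_tokens: int, days: int = 30) -> float:
    """Estimate a monthly bill from average per-request token counts."""
    total_requests = requests_per_day * days
    input_cost = total_requests * input_tokens / 1_000_000 * pricing.input_per_mtok
    output_cost = total_requests * output_tokens / 1_000_000 * pricing.output_per_mtok
    return input_cost + output_cost


# Assumed prices: the larger model costs three times as much per token.
small_model = Pricing(input_per_mtok=1.00, output_per_mtok=5.00)
large_model = Pricing(input_per_mtok=3.00, output_per_mtok=15.00)

# Hypothetical workload: 50,000 requests/day, 800 input and 200 output tokens each.
small_cost = monthly_cost(small_model, 50_000, 800, 200)
large_cost = monthly_cost(large_model, 50_000, 800, 200)

print(f"small model: ${small_cost:,.0f}/month")
print(f"large model: ${large_cost:,.0f}/month")
print(f"ratio: {small_cost / large_cost:.2f}")
```

Under these assumptions the smaller model's bill is one third of the larger model's. The absolute numbers depend entirely on the workload you plug in.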

Thanks to latency improvements and smarter context handling, Haiku 4.5 can:

Claude Haiku 4.5 is:

🔹 Customer Support Bot

Problem: Reps overloaded, high response times

Approach: Haiku 4.5 responds, triages, and escalates in real time

Result: Faster support, fewer handoffs, higher CSAT
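A minimal sketch of the respond, triage, and escalate flow might look like the following. The names `triage`, `keyword_classify`, `canned_reply`, and the escalation labels are all hypothetical. The two callables stand in for model calls so that the example runs without network access; in a real deployment they would wrap requests to the model.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical categories that should always go to a human agent.
ESCALATION_LABELS = {"billing_dispute", "outage"}


@dataclass
class TriageResult:
    label: str
    escalate: bool
    reply: str


def triage(ticket: str,
           classify: Callable[[str], str],
           draft_reply: Callable[[str, str], str]) -> TriageResult:
    """Classify a ticket, then either escalate it or answer it directly."""
    label = classify(ticket)
    if label in ESCALATION_LABELS:
        return TriageResult(label, True, "A support specialist will follow up shortly.")
    return TriageResult(label, False, draft_reply(ticket, label))


def keyword_classify(ticket: str) -> str:
    """Stand-in for a model-based classifier (keyword matching only)."""
    text = ticket.lower()
    if "refund" in text or "charged twice" in text:
        return "billing_dispute"
    if "outage" in text or "is down" in text:
        return "outage"
    return "general"


def canned_reply(ticket: str, label: str) -> str:
    """Stand-in for a model-drafted reply."""
    return f"[{label}] Thanks for reaching out. Here is what we found for your question."


urgent = triage("I was charged twice and want a refund", keyword_classify, canned_reply)
routine = triage("How do I change my avatar?", keyword_classify, canned_reply)
print(urgent.label, urgent.escalate)
print(routine.label, routine.escalate)
```

Keeping the classifier and reply drafter as injected callables means the routing logic can be tested with stubs before a model is wired in.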

🔹 Code Companion

Problem: Coding assistants lag on large files

Approach: Haiku 4.5 parses and edits at 200+ tokens/sec

Result: Snappier dev workflows, better adoption
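To see why throughput matters for a coding assistant, the back-of-the-envelope sketch below converts a tokens-per-second rate into wall-clock time for an edit. The function name, the 1,000-token edit size, and the slower comparison rate are assumptions for illustration; only the 200 tokens/sec figure comes from the text above.

```python
def generation_seconds(output_tokens: int, tokens_per_second: float,
                       first_token_latency: float = 0.0) -> float:
    """Estimate time to stream a response: startup latency plus tokens / rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return first_token_latency + output_tokens / tokens_per_second


# Hypothetical edit that requires 1,000 output tokens.
edit_tokens = 1_000
fast = generation_seconds(edit_tokens, tokens_per_second=200)
slow = generation_seconds(edit_tokens, tokens_per_second=100)  # assumed slower model

print(f"at 200 tokens/sec: {fast:.1f} s")
print(f"at 100 tokens/sec: {slow:.1f} s")
```

Doubling the generation rate halves the wait for the same edit, which is the difference a developer notices in an interactive editor.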

🔹 Sales Lead Filter

Problem: Manual triage wastes time

Approach: Haiku 4.5 qualifies and replies to leads

Result: More demos, faster funnel

“What was recently at the frontier is now cheaper and faster.”

— Anthropic, Introducing Claude Haiku 4.5

India’s AI startup ecosystem is booming, but many still avoid LLMs due to cost. Claude Haiku 4.5 opens doors:
