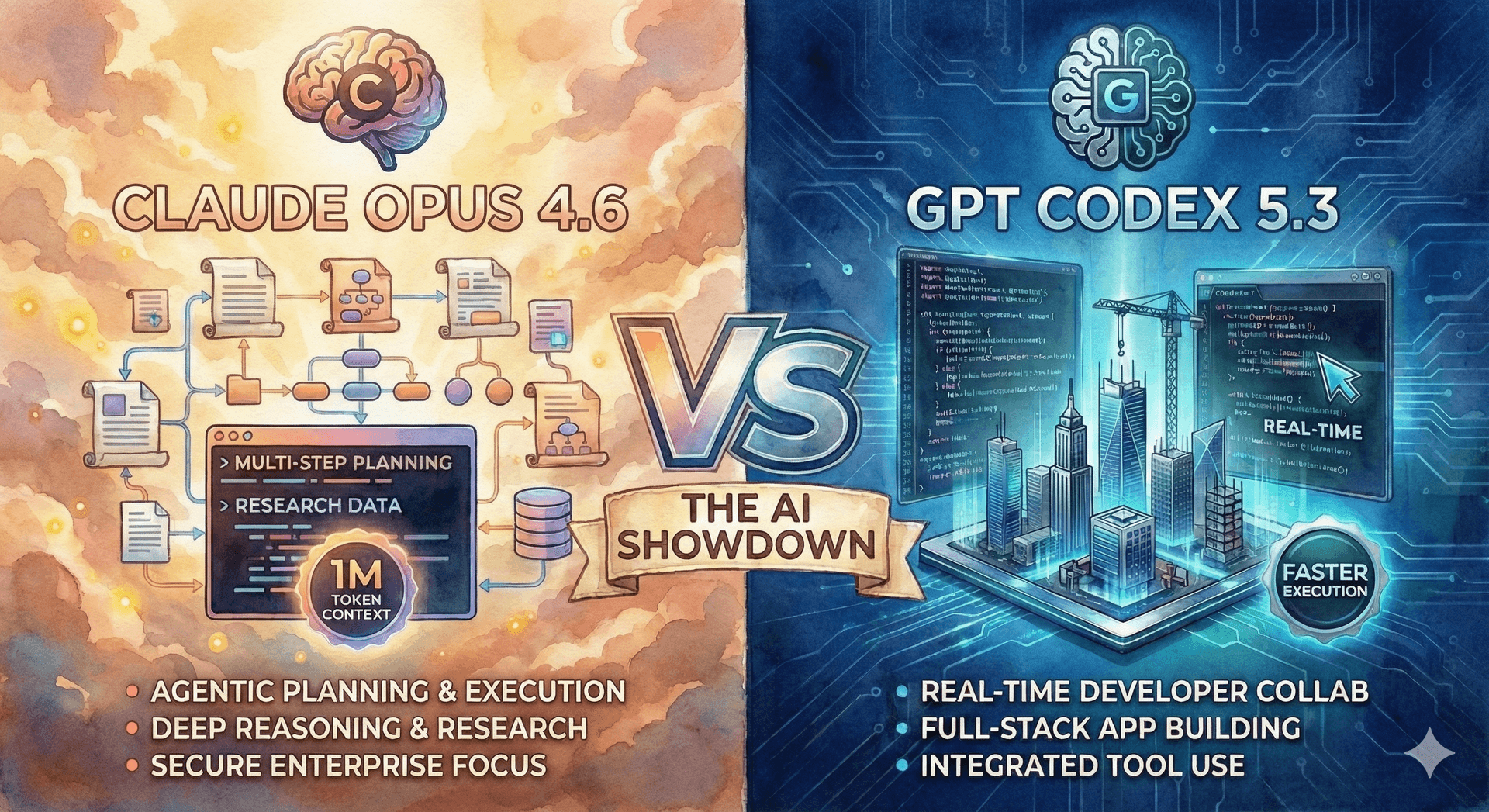

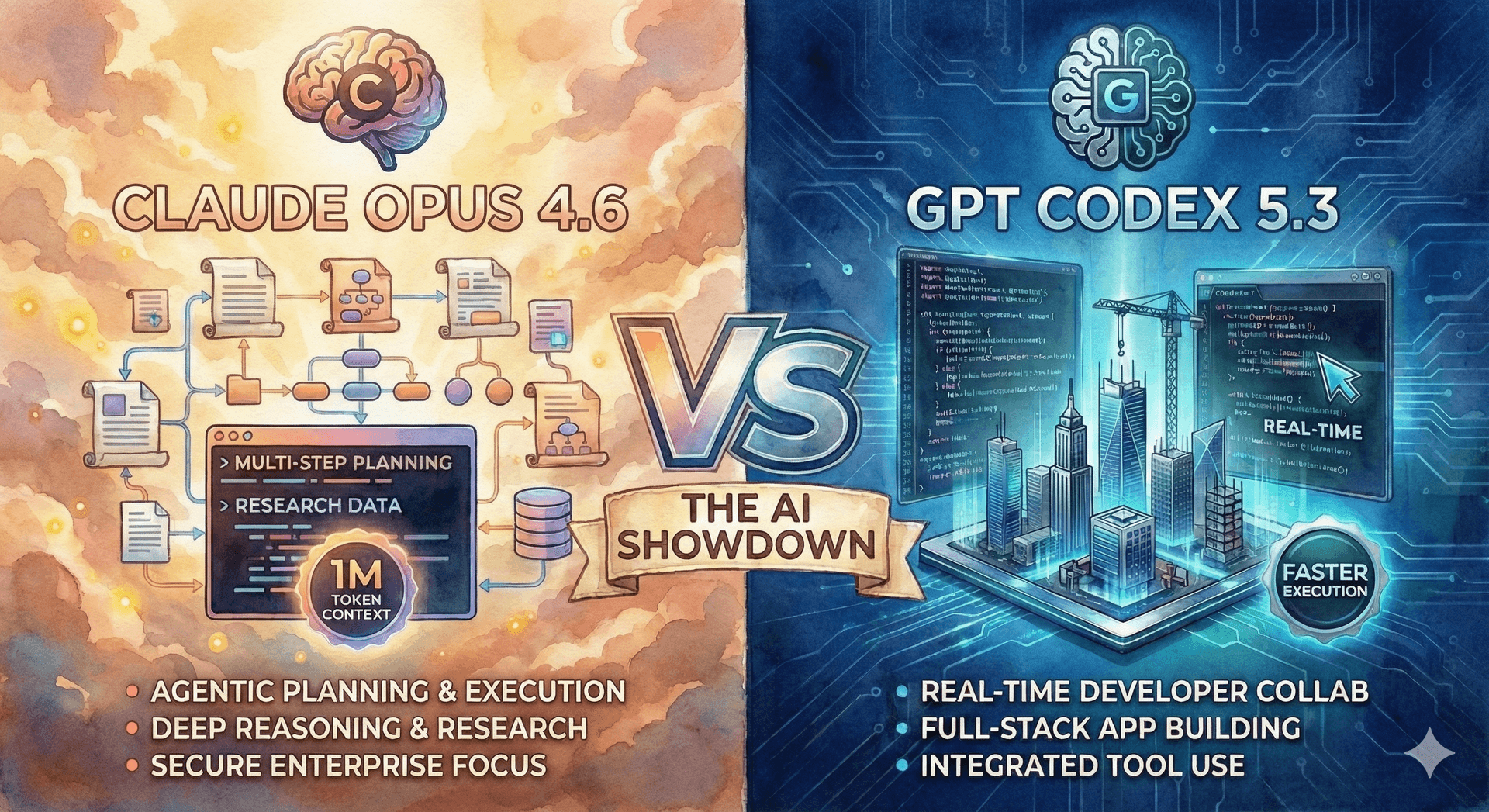

SmolLM2 by Hugging Face is a groundbreaking family of compact language models delivering exceptional speed and efficiency.

Speed and efficiency matter more as AI moves onto phones and edge devices. SmolLM2 from Hugging Face is a family of small language models (SLMs) built for that setting: compact, fast, and capable enough to show that useful AI does not have to be large.

Introducing SmolLM2: Compact and Capable

SmolLM2 is a family of open-source language models designed for efficiency. Available in 135M, 360M, and 1.7B parameter sizes, these models are built to be lightweight and run fast, even on resource-constrained devices. The 1.7B variant is particularly impressive, setting a new bar for small model performance.

Blazing Speed: Efficiency Perfected

SmolLM2 prioritizes speed. Its small size keeps latency low, which matters for interactive and on-device applications. Figures cited for the 135M model:

1200 tokens per second: Fast responses, though actual throughput varies with hardware and runtime. ⚡

723MB footprint (135M model): Truly on-device ready. 🤏
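
As a rough cross-check on figures like these, the weight footprint of a model can be estimated from its parameter count and numeric precision, and generation time from a throughput number. The sketch below is a back-of-the-envelope calculation, not a benchmark: the function names are illustrative, the parameter counts are the nominal sizes taken from the model names, and real memory use also includes activations, the KV cache, and runtime overhead.

```python
# Back-of-the-envelope estimates; function names are illustrative, not a library API.

BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1}


def estimate_weight_mb(num_params: float, dtype: str = "bf16") -> float:
    """Approximate size of the raw weights in megabytes (1 MB = 1e6 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


def seconds_to_generate(num_tokens: int, tokens_per_second: float) -> float:
    """Time to emit num_tokens at a steady decoding throughput."""
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    # Nominal sizes from the model names (assumption: exact parameter counts differ slightly).
    for name, params in [("135M", 135e6), ("360M", 360e6), ("1.7B", 1.7e9)]:
        sizes = {dtype: round(estimate_weight_mb(params, dtype)) for dtype in BYTES_PER_PARAM}
        print(name, sizes)
    # Time for a 300-token reply at the 1200 tokens/s quoted above.
    print(seconds_to_generate(300, 1200))
```

Published footprints depend on the precision used and on what is counted, so an estimate like this is best read as a lower bound on the memory a device needs.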

Performance That Defies Size: Small Model, Big Impact

SmolLM2 punches above its weight:

Benchmark Leader: The 1.7B model outperforms Qwen2.5-1.5B and Llama3.2-1B on several benchmarks. 💪

Intelligent Core: Strong instruction following, knowledge, reasoning. 🧠

Versatile Skills: Competitive in math and code. 🧮💻

The SmolLM2 Advantage: Innovation in Training

SmolLM2's secret lies in:

Massive Data Training: Up to 11 trillion tokens (for the 1.7B model) for broad knowledge. 🤯

Optimized Training: Multi-stage process for peak performance. 🎓

Specialized Datasets: High-quality data for key domains. ✨

Open Source: Released under the Apache 2.0 license for community-driven innovation. 🤝

Use Cases: Speed Unleashed in Real-World Applications

SmolLM2's speed and efficiency are ideal for:

Mobile AI: On-device intelligence for smartphones. 📱

Edge Computing: Real-time processing for IoT and sensors. 🌐

Resource-Limited Access: Democratizing AI in all environments. 🌍

Rapid Prototyping: Accelerating AI development. 🧪

The Future of AI is Efficient and Fast - SmolLM2 Leads the Way

SmolLM2 redefines expectations for small language models. It's fast, efficient, and surprisingly powerful, making advanced AI accessible everywhere. Experience the speed revolution and explore SmolLM2 today!

Model pages on Hugging Face: HuggingFaceTB/SmolLM2-1.7B and HuggingFaceTB/SmolLM2-1.7B-Instruct
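
To try the Instruct checkpoint named above, something like the following should work with the Hugging Face transformers library. This is a minimal sketch, assuming `transformers` and `torch` are installed and the machine has enough memory for the 1.7B weights; `build_messages` and `generate_reply` are illustrative helper names, not part of any library.

```python
# Minimal sketch using the Hugging Face transformers library (pip install transformers torch).
# build_messages and generate_reply are illustrative helper names, not a library API.

CHECKPOINT = "HuggingFaceTB/SmolLM2-1.7B-Instruct"


def build_messages(user_text: str) -> list[dict]:
    """Wrap a user prompt in the role/content message format used by chat templates."""
    return [{"role": "user", "content": user_text}]


def generate_reply(user_text: str, checkpoint: str = CHECKPOINT, max_new_tokens: int = 64) -> str:
    # Imported lazily so build_messages can be used without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForCausalLM.from_pretrained(checkpoint)

    # Render the conversation with the model's chat template, then tokenize it.
    prompt = tokenizer.apply_chat_template(
        build_messages(user_text), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Decode only the newly generated tokens, skipping the prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


# Example call (downloads the checkpoint on first use):
# print(generate_reply("What is the capital of France?"))
```

Passing a smaller checkpoint name as `checkpoint` is the simplest way to trade quality for speed and memory on constrained hardware.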

#AI #SmallLanguageModels #SLM #SmolLM2 #HuggingFace #FastAI #EfficientAI #MachineLearning #Speed
