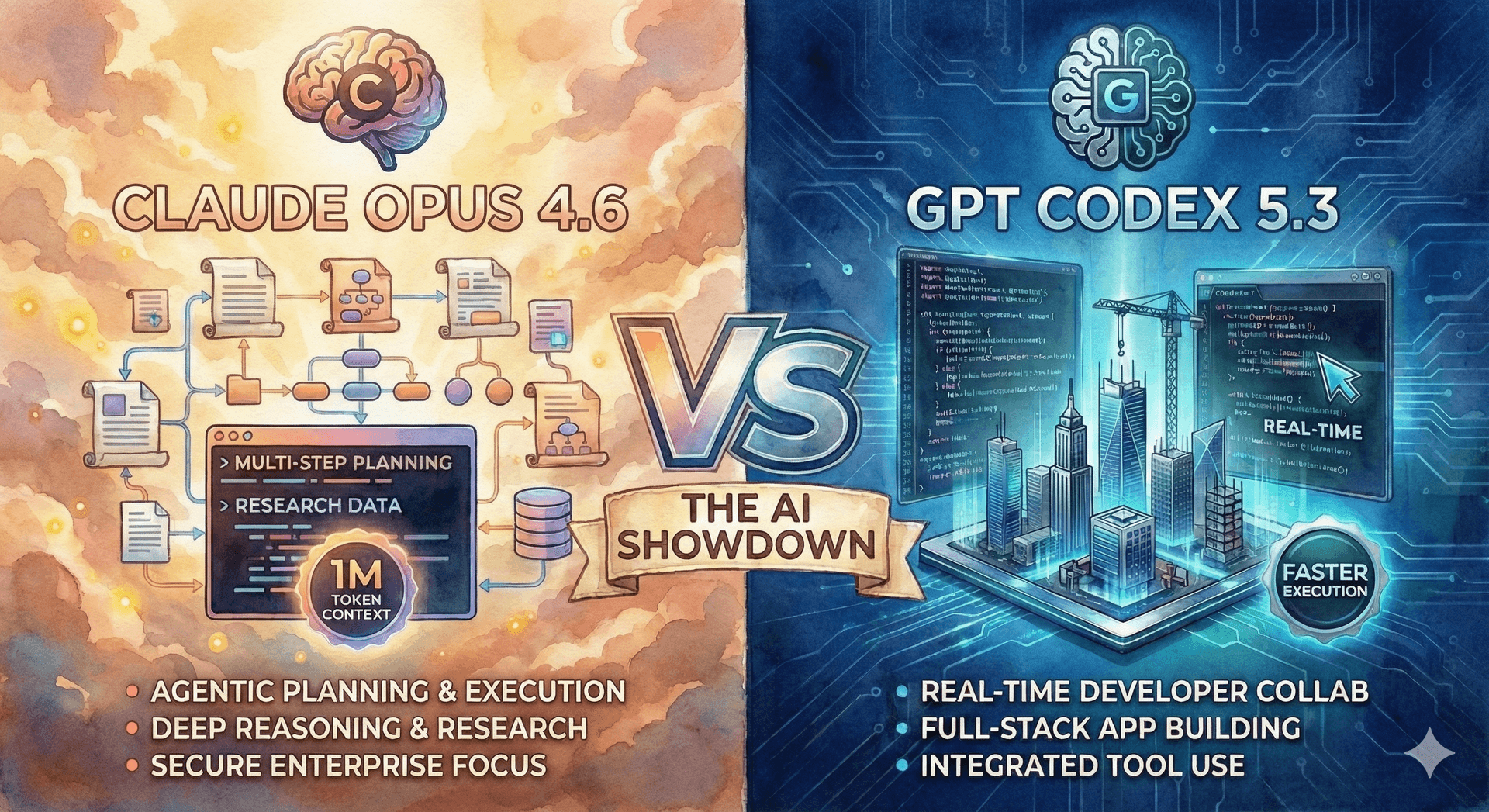

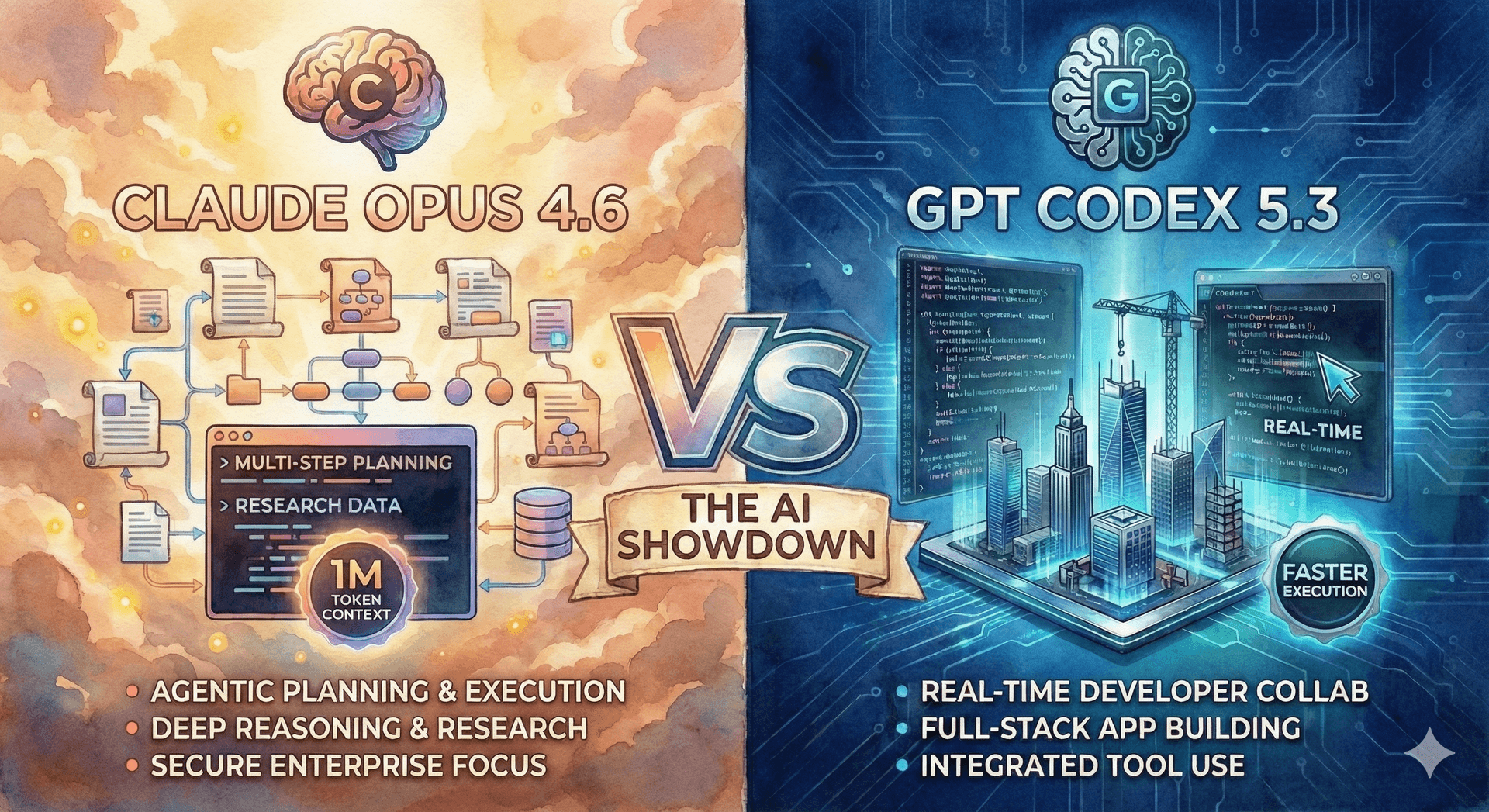

Discover NVIDIA Nemotron 3 Nano: an open MoE LLM with up to 1M-token context, built for fast, tool-using AI agents, RAG, and long workflows. 🚀

If you’ve ever shipped an LLM feature, you know the lifecycle:

On Dec 15, 2025, NVIDIA dropped Nemotron 3 (sizes: Nano, Super, and Ultra), an open model family built for the agent era: long-context, tool-using, multi-step workflows that don’t faceplant halfway through a task.

NVIDIA’s premise is simple:

Modern AI isn’t “one prompt → one answer.”

It’s many steps, many tools, many tokens.

Nemotron 3 is designed to keep agents:

And yes, NVIDIA says Nemotron 3 Nano delivers ~4× higher token throughput than Nemotron 2 Nano and can reduce reasoning-token generation by up to 60%.

Nemotron 3 Nano is available immediately; Super and Ultra are planned for the first half of 2026.

Here’s where the spicy stats start 🌶️📊:

Up to 1,000,000-token context window (yes, 1M)

MoE model: 31.6B total parameters, ~3.2B active per token

Pretrained on 25 trillion text tokens (including 3T+ new unique tokens over Nemotron 2)

Translation for humans: big brain available, small brain bill 🧾😅
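To make "big brain available, small brain bill" concrete, here is a toy sketch of generic top-k Mixture-of-Experts routing in plain Python: a router scores every expert, but only the top `k` actually run for a given token. The function names, the 8-expert / `k=2` setup, the scaling "experts," and the dot-product gate are all illustrative assumptions, not Nemotron 3's actual architecture or routing configuration.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(token_vec, experts, router_weights, k=2):
    """Route one token to its top-k experts and mix their outputs.

    experts:        list of callables mapping a vector to a vector (toy experts)
    router_weights: one weight vector per expert; gate score = dot(weights, token)
    Returns (output_vector, indices_of_experts_that_ran).
    """
    scores = [sum(w * x for w, x in zip(wv, token_vec)) for wv in router_weights]
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    gates = softmax([scores[i] for i in top])  # renormalize over the chosen experts only
    out = [0.0] * len(token_vec)
    for g, i in zip(gates, top):
        y = experts[i](token_vec)  # only these k experts execute; the rest stay idle
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, top

random.seed(0)
dim, num_experts = 4, 8
# Toy experts: expert s just scales its input by s (hypothetical, for illustration).
experts = [(lambda v, s=s: [s * x for x in v]) for s in range(1, num_experts + 1)]
router = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)]
out, used = moe_forward([0.5, -0.2, 0.1, 0.9], experts, router, k=2)
print(f"ran {len(used)} of {num_experts} experts: {used}")
```

Scale the same idea up and you get the "31.6B total, ~3.2B active" split: every expert's weights sit in memory, but each token only pays compute for the few experts it is routed to.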

Because a lot of agent pain comes from chunking gymnastics:

With a native 1M-token window, Nemotron 3 is explicitly targeting:

NVIDIA’s own technical blog frames this as enabling sustained reasoning across long-horizon, multi-agent workflows.
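The "chunking gymnastics" point is easy to quantify. This sketch counts how many overlapping windows a long input needs for a given context size. The 128K comparison window, the 600K-token document, and the overlap and reserve sizes are hypothetical numbers chosen for illustration, not figures from NVIDIA.

```python
import math

def num_chunks(total_tokens, window, overlap, reserved=0):
    """Count sliding-window chunks needed to cover total_tokens.

    window:   model context size in tokens
    overlap:  tokens repeated between consecutive chunks to preserve continuity
    reserved: tokens held back per call for instructions and the model's output
    """
    usable = window - reserved
    if usable <= overlap:
        raise ValueError("window too small for the requested overlap/reservation")
    if total_tokens <= usable:
        return 1  # everything fits in a single call
    stride = usable - overlap  # how far each new chunk advances
    return 1 + math.ceil((total_tokens - usable) / stride)

doc = 600_000  # hypothetical: a large codebase or log dump, measured in tokens
for window in (128_000, 1_000_000):
    n = num_chunks(doc, window, overlap=2_000, reserved=8_000)
    print(f"{window:,}-token window -> {n} call(s)")
```

With these made-up numbers, the 128K window needs 6 overlapping calls (plus whatever logic stitches their answers back together), while the 1M window needs just 1.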

NVIDIA’s technical report claims the following for Nemotron 3 Nano:

Up to 3.3× higher inference throughput vs similarly sized open models in their comparisons

In an 8K-input / 16K-output scenario: 2.2× faster than GPT-OSS-20B and 3.3× faster than Qwen3-30B-A3B-Thinking-2507 (in NVIDIA’s tests)
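Throughput multiples are easier to feel as serving cost. Here is back-of-the-envelope arithmetic, assuming a throughput-bound workload on the same hardware, so that an N× throughput gain means roughly 1/N of the GPU-time for the same token volume. Only the 2.2× and 3.3× multiples come from NVIDIA's comparison; the 100 GPU-hour baseline is a made-up placeholder.

```python
def gpu_hours_needed(baseline_gpu_hours, throughput_multiple):
    """GPU-hours to serve the same token volume at a higher throughput multiple.

    Assumes the workload is throughput-bound and runs on identical hardware.
    """
    return baseline_gpu_hours / throughput_multiple

baseline = 100.0  # hypothetical GPU-hours a comparison model needs for some agent workload
for name, multiple in [("GPT-OSS-20B", 2.2), ("Qwen3-30B-A3B-Thinking-2507", 3.3)]:
    hours = gpu_hours_needed(baseline, multiple)
    print(f"vs {name}: {hours:.1f} GPU-hours instead of {baseline:.0f} "
          f"({1 - hours / baseline:.0%} less)")
```

Under those assumptions, 2.2× works out to about 55% less GPU-time and 3.3× to about 70% less. Real savings depend on batch size, hardware, and how decode-heavy the workload actually is.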

That matters because agents don’t “answer once.” They loop:

plan → tool → read → verify → revise → repeat 🔁

So throughput isn’t a nice-to-have; it’s survival. 😅
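The plan → tool → read → verify → revise loop can be sketched in plain Python to show why generation cost multiplies per task. Everything here is hypothetical: `call_model` and `run_tool` are stubs standing in for a real LLM endpoint and a real tool, "tokens" are approximated by word counts, and the verifier is faked to pass on the third iteration.

```python
from dataclasses import dataclass, field

@dataclass
class RunStats:
    model_calls: int = 0
    generated_tokens: int = 0
    trace: list = field(default_factory=list)  # (iteration, verified) pairs

def call_model(stage, context, stats):
    """Hypothetical stand-in for an LLM call. A real version would send `context` to a model."""
    stats.model_calls += 1
    reply = f"{stage} output for step {len(stats.trace)}"
    stats.generated_tokens += len(reply.split())  # crude word count as a token proxy
    return reply

def run_tool(plan):
    """Hypothetical tool call (search, code execution, an API request, ...)."""
    return f"tool result for: {plan}"

def agent_loop(task, max_iters=5, done_after=3):
    """plan -> tool -> read -> verify -> revise, repeated until verified or out of budget.

    done_after fakes the verifier passing on that iteration (a demo assumption).
    """
    stats = RunStats()
    context = [task]
    for i in range(1, max_iters + 1):
        plan = call_model("plan", context, stats)
        observation = run_tool(plan)
        notes = call_model("read", context + [observation], stats)
        call_model("verify", context + [notes], stats)
        verified = i >= done_after  # stand-in for parsing the verifier's verdict
        stats.trace.append((i, verified))
        if verified:
            break
        context.append(call_model("revise", context + [notes], stats))
    return stats

stats = agent_loop("fix the failing unit test")
print(stats.model_calls, "model calls,", stats.generated_tokens, "generated 'tokens'")
```

Even this toy run makes 11 model calls for a single task, and each one pays the generation cost, so any per-token speedup is collected on every pass through the loop.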

For the bigger siblings, NVIDIA describes:

Nemotron 3 Super: ~100B parameters, up to 10B active per token

Nemotron 3 Ultra: ~500B parameters, up to 50B active per token
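A quick calculation with the parameter counts quoted in this post shows the family keeps roughly the same sparsity ratio: about 10% of parameters active per token at every size. The compute column uses the common rough rule of ~2 FLOPs per active parameter per token, which ignores attention and other overheads; treat it as an order-of-magnitude estimate, not an NVIDIA figure.

```python
# Parameter counts as quoted in this post (billions). Super/Ultra are approximate,
# and their active counts are "up to" values.
MODELS = {
    "Nano": (31.6, 3.2),
    "Super": (100.0, 10.0),
    "Ultra": (500.0, 50.0),
}

def active_fraction(total_b, active_b):
    """Share of the model's parameters that participate in computing one token."""
    return active_b / total_b

for name, (total_b, active_b) in MODELS.items():
    frac = active_fraction(total_b, active_b)
    gflops = 2 * active_b  # rule of thumb: ~2 FLOPs per active parameter per token
    print(f"{name:>5}: {active_b:g}B of {total_b:g}B active ({frac:.0%}), "
          f"~{gflops:g} GFLOPs/token")
```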

And NVIDIA’s technical blog says Super/Ultra will add enhancements like:

NVIDIA is leaning into openness beyond “here are the weights, good luck”:

The Nano report says NVIDIA provides the training recipe, the code, and most of the data used to train the model

NVIDIA’s technical blog mentions a synthetic pretraining corpus of nearly 10 trillion tokens that can be inspected or repurposed

If you want to feel Nemotron 3’s intent, don’t ask for a poem.

Try:

If it stays coherent over long contexts and doesn’t hallucinate tool calls like it’s improvising jazz 🎷… you’re in business.

Nemotron 3 is NVIDIA saying:

“We’re not just powering the models. We’re shipping open models designed for real agent workloads.”

And the stats back up that direction: 1M context, MoE efficiency (31.6B total / ~3.2B active), and major throughput claims aimed squarely at multi-agent systems.

Continue exploring these related topics

Opus 4.6 and GPT-5.3 Codex are redefining AI specialization. Compare writing, coding, reasoning, and performance to choose the right model for your workflow.

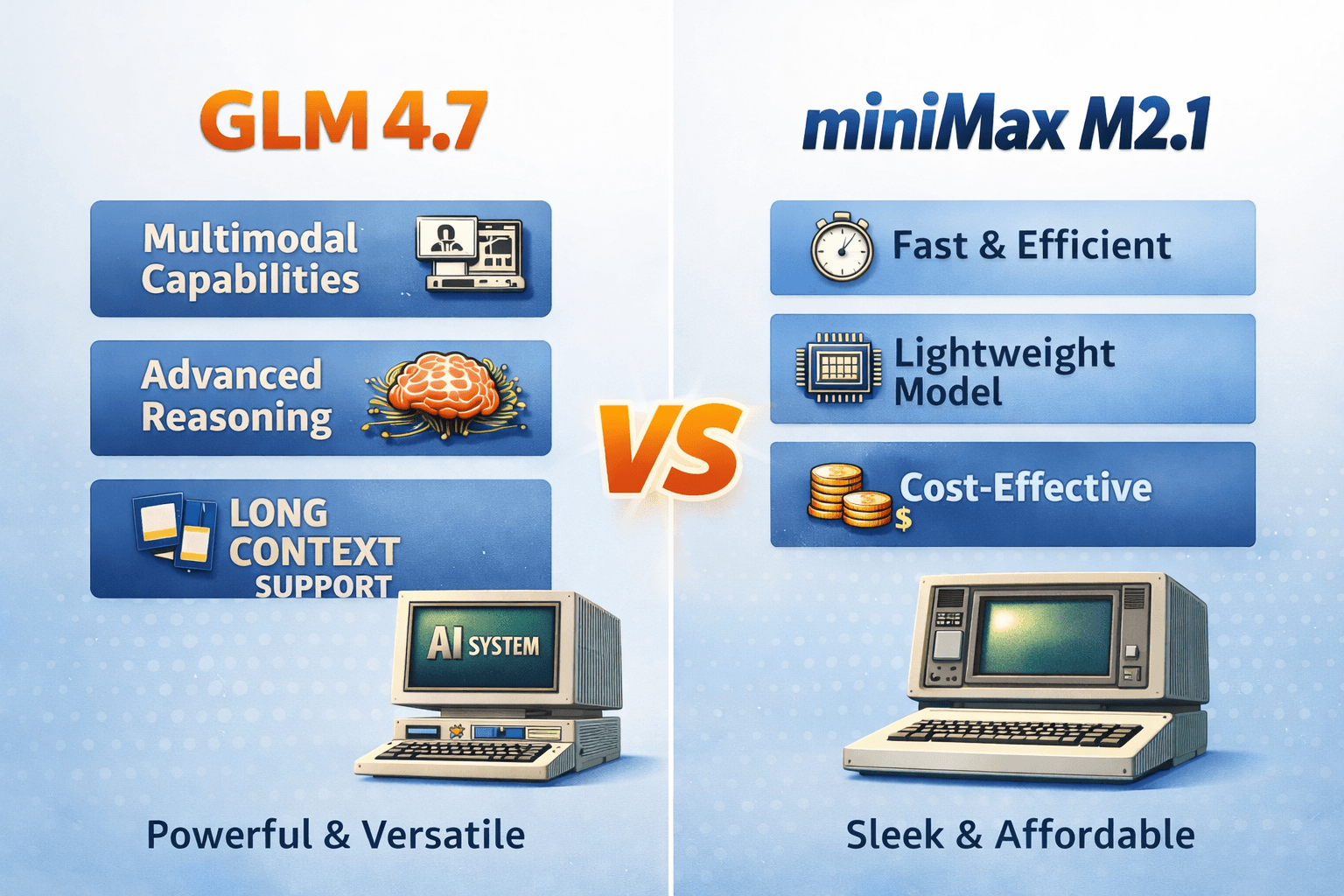

GLM 4.7 vs MiniMax M2.1—open models that surprisingly rival Opus 4.5 vibes. We break down strengths, tradeoffs, and when to use each in production.

Meet the new ChatGPT Images model (GPT Image 1.5): generate and edit AI images up to 4× faster with sharper prompts and consistent faces/logos.