Claude Opus 4.5 Is Stupidly Good for the Price 🚀

No hype, just hard numbers—benchmarks, pricing, and real-world tests that show how Claude Opus 4.5 delivers top-tier performance at a much lower cost.

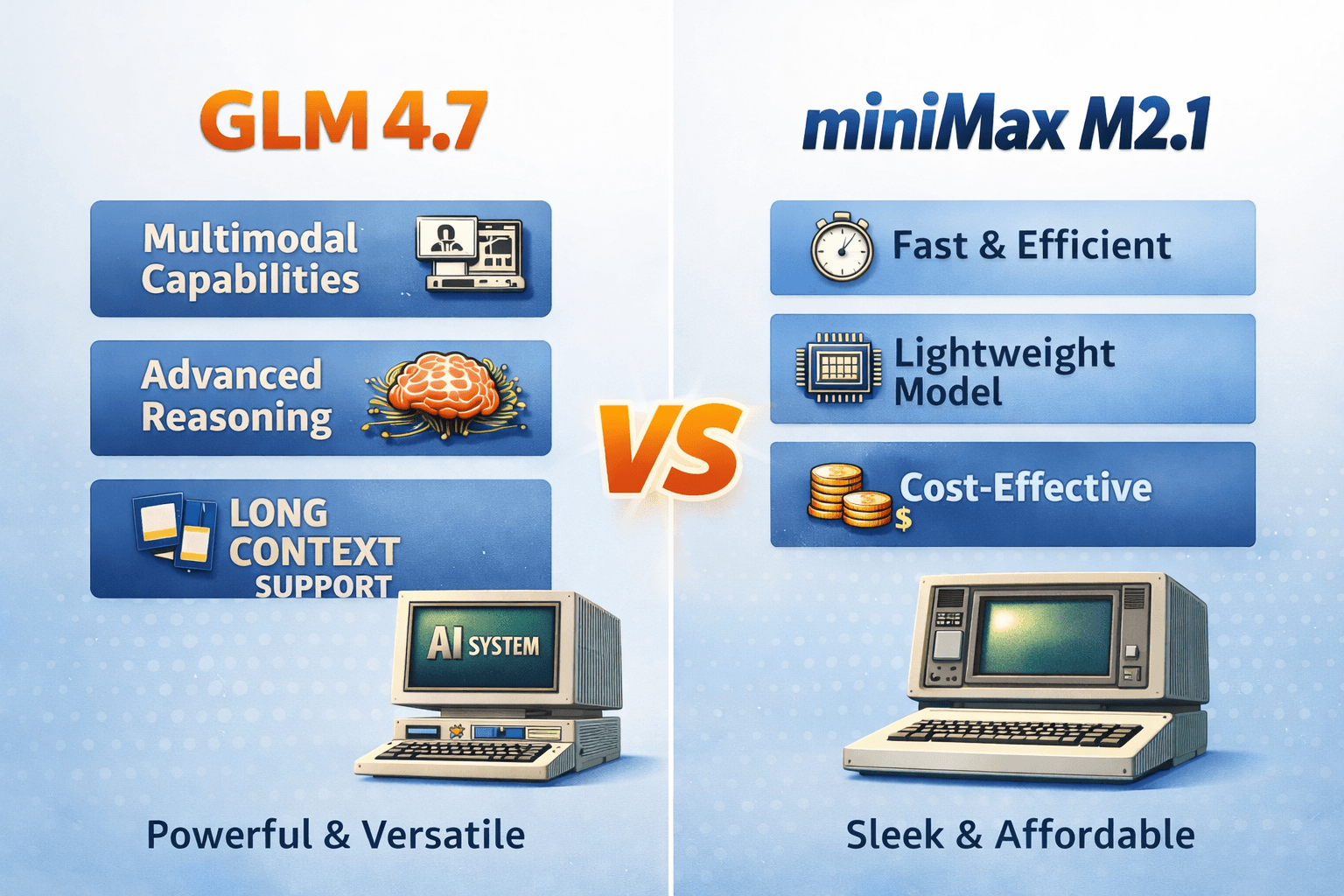

GLM-4.7 vs MiniMax-M2.1: open models that surprisingly rival the feel of Opus 4.5. We break down their strengths, tradeoffs, and when to use each in production.

Let’s be real: We’ve all stared at our Claude Opus 4.5 bill and wondered if we should start selling plasma to keep our coding agents running. Anthropic’s flagship is a genius, but it’s a genius that charges like a high-end Beverly Hills lawyer. Enter the challengers: GLM-4.7 and MiniMax-M2.1, two open-weight powerhouses designed to punch the proprietary monopoly right in its closed-source teeth. These models have effectively commoditized "Opus-level" intelligence for pennies on the dollar.

GLM-4.7: The Strategist with a Photographic Memory 🧠

GLM-4.7 (from Z.ai) is the model that never forgets why it made a decision ten turns ago. Its killer feature, Preserved Thinking, lets it retain "thought blocks" across an entire conversation, tackling the dreaded "lazy dev" problem where AI performance degrades during long, complex sessions. With 355 billion total parameters (32B active per token), it is a massive beast optimized for multi-file, agentic coding.
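To make the idea concrete, here is a conceptual Python sketch of what "retaining thought blocks" means for the context sent on each new turn. The `Turn` class, its `reasoning` field, and `build_context` are hypothetical names for illustration only, not Z.ai's actual API; the real request format may differ.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    role: str            # "user" or "assistant"
    content: str         # the visible reply or prompt
    reasoning: str = ""  # hypothetical field holding the model's thought block


def build_context(history: list[Turn], preserve_thinking: bool) -> list[dict]:
    """Assemble the message list sent along with the next request."""
    messages = []
    for turn in history:
        msg = {"role": turn.role, "content": turn.content}
        # With preservation on, earlier thought blocks travel with the history,
        # so the model can still see *why* it chose something many turns ago.
        if preserve_thinking and turn.reasoning:
            msg["reasoning"] = turn.reasoning
        messages.append(msg)
    return messages
```

Without preservation, the model on turn eleven sees only its earlier answers, not the rationale behind them, which is the kind of drift a feature like Preserved Thinking is meant to address.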

MiniMax-M2.1: The 10B Speed Demon 🏎️

MiniMax is "born for agents" and redefines efficiency. Despite having 230 billion total parameters, it activates only 10 billion per token, which makes it a lightning-bolt operator for compile-run-test loops. It excels at "messy reality": think cleaning a corrupted CSV file with engineer-like fallback logic while other models are still throwing errors.
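"Engineer-like fallback logic" is easier to picture in code. The Python sketch below is a hypothetical illustration of the layered fallbacks such a cleanup typically involves (encoding, delimiter, ragged rows); it is not MiniMax output, and `clean_csv` is an illustrative name.

```python
import csv

CANDIDATE_DELIMITERS = ",;\t|"


def clean_csv(raw: bytes) -> list[list[str]]:
    """Parse messy CSV bytes into rows as wide as the header row.

    Assumes no quoted field spans multiple lines (lines are split up front).
    """
    # Fallback 1: strict UTF-8 first (utf-8-sig also drops a leading BOM),
    # then latin-1, which can decode any byte sequence.
    try:
        text = raw.decode("utf-8-sig")
    except UnicodeDecodeError:
        text = raw.decode("latin-1")
    # NUL bytes make older csv modules raise "line contains NUL", so strip them.
    text = text.replace("\x00", "")

    lines = [line for line in text.splitlines() if line.strip()]
    if not lines:
        return []
    # Fallback 2: pick the candidate delimiter that appears most often in the
    # header; max() keeps the first candidate (a comma) when none appear.
    delimiter = max(CANDIDATE_DELIMITERS, key=lines[0].count)

    rows = [[cell.strip() for cell in row]
            for row in csv.reader(lines, delimiter=delimiter)]
    width = len(rows[0])
    cleaned = []
    for cells in rows:
        # Fallback 3: pad short rows and fold overflow cells into the last column.
        if len(cells) < width:
            cells = cells + [""] * (width - len(cells))
        elif len(cells) > width:
            cells = cells[: width - 1] + [delimiter.join(cells[width - 1:])]
        cleaned.append(cells)
    return cleaned
```

For example, `clean_csv(b"name;age\nAda;36\n\nBob\n")` detects the semicolon, skips the blank line, and pads Bob's row to `["Bob", ""]` instead of failing.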

The open-source community is no longer just "trailing behind"; in some cases, it's actually beating the king.

The Vibe: GLM-4.7 is a math and coding wizard, actually beating Opus 4.5 on the brutal AIME 2025 benchmark. MiniMax-M2.1 is the efficiency king, delivering nearly 5x the throughput of GLM-4.7 and handling massive 131K-token responses in a single shot.
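The total and active parameter counts quoted for each model explain part of the speed story. This back-of-envelope Python sketch uses the common rule of thumb that a transformer forward pass costs roughly 2 FLOPs per active parameter per token; treat it as an approximation that ignores attention cost, memory bandwidth, batching, and the serving stack.

```python
# Total and active parameter counts (in billions) as quoted in this article.
MODELS = {
    "GLM-4.7": (355, 32),
    "MiniMax-M2.1": (230, 10),
}


def moe_profile(total_b: float, active_b: float) -> tuple[float, float]:
    """Return (share of weights active per token, approx. GFLOPs per token)."""
    active_share = active_b / total_b
    # Rule of thumb: ~2 FLOPs per active parameter per generated token,
    # so (billions of active params) * 2 = GFLOPs per token.
    gflops_per_token = 2 * active_b
    return active_share, gflops_per_token


profiles = {name: moe_profile(*counts) for name, counts in MODELS.items()}
for name, (share, gflops) in profiles.items():
    print(f"{name}: {share:.1%} of weights active, ~{gflops:.0f} GFLOPs/token")

ratio = profiles["GLM-4.7"][1] / profiles["MiniMax-M2.1"][1]
print(f"Per-token compute ratio: {ratio:.1f}x")
```

By this estimate MiniMax-M2.1 does about 3.2x less compute per token than GLM-4.7 (about 4.3% vs 9.0% of weights active), so a throughput gap of nearly 5x presumably also reflects serving and hardware factors this arithmetic leaves out.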

This is where the revolution gets hilarious: using Claude Opus 4.5 via API is roughly 20x more expensive than using MiniMax-M2.1 for the same output.
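The "20x" figure is simple per-token arithmetic. In the Python sketch below, the prices are assumed list prices in USD per million tokens (input, output) at the time of writing, not numbers from this article; check each provider's pricing page before reusing them.

```python
# Assumed list prices, USD per million tokens: (input, output).
# Verify against each provider's current pricing page.
PRICES = {
    "Claude Opus 4.5": (5.00, 25.00),
    "MiniMax-M2.1": (0.30, 1.20),
}


def job_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one workload at the assumed per-million-token prices."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


# Example workload: 2M input tokens and 1M output tokens.
opus = job_cost("Claude Opus 4.5", 2_000_000, 1_000_000)
minimax = job_cost("MiniMax-M2.1", 2_000_000, 1_000_000)
print(f"Opus ${opus:.2f} vs MiniMax ${minimax:.2f} -> {opus / minimax:.1f}x")
```

With these assumed prices, output tokens alone cost about 20.8x more on Opus 4.5 (25 / 1.20), while an input-heavy mix like the example lands a little under 20x.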

The UI / Subscription Battle

• Claude: Individual users pay $20/month for Pro or from $100/month for the Max plan.

• GLM-4.7: Z.ai offers a Coding Plan for just $3/month, which gives you 3x the usage quota of Claude Pro and works directly in tools like Claude Code and Cline. ☕

• MiniMax-M2.1: MiniMax offers tiers from $10 to $50/month, but the MiniMax Agent is currently FREE for a limited time.

• GLM-4.7 is the King of "Vibe Coding". In tests, it generated a functional Minecraft clone, a 3D racing game, and modern UI layouts with almost no hand-holding. It even boasts 91% PPT compatibility, so its slides look professional instead of like a 1995 PowerPoint template.

• MiniMax-M2.1 is the Ultimate Colleague. It feels more like a teammate who understands requirements and is willing to "just get things done". It excels at long-horizon toolchains across shells and browsers, gracefully recovering from flaky steps during complex tasks.

• Choose GLM-4.7 if you are a Vibe Coder who wants beautiful UIs, deep reasoning for complex debugging, and a $3/month subscription that won't make your bank account cry.

• Choose MiniMax-M2.1 if you are building autonomous agents that need to move at warp speed, process massive 131K-token responses, and handle bulk data for pennies.

• Stick with Claude Opus 4.5 only if you need the absolute pinnacle of creative nuance and you're rich enough to never check your API dashboard.

The Bottom Line: The "Proprietary Tax" is now officially optional. Open weights are no longer just the "cheap alternative"—they are the new standard.
