Gemini 3 Flash is Google’s fastest frontier AI model yet, combining pro-level reasoning with ultra-low latency and lower cost.

If you’ve ever asked an AI a simple question and it responded like it was filing taxes in slow motion… yeah. Same. 😅

Gemini 3 Flash is Google’s latest model with “frontier intelligence built for speed,” aiming to deliver Pro-grade reasoning with Flash-level latency, efficiency, and cost.

Straight from Google’s announcement:

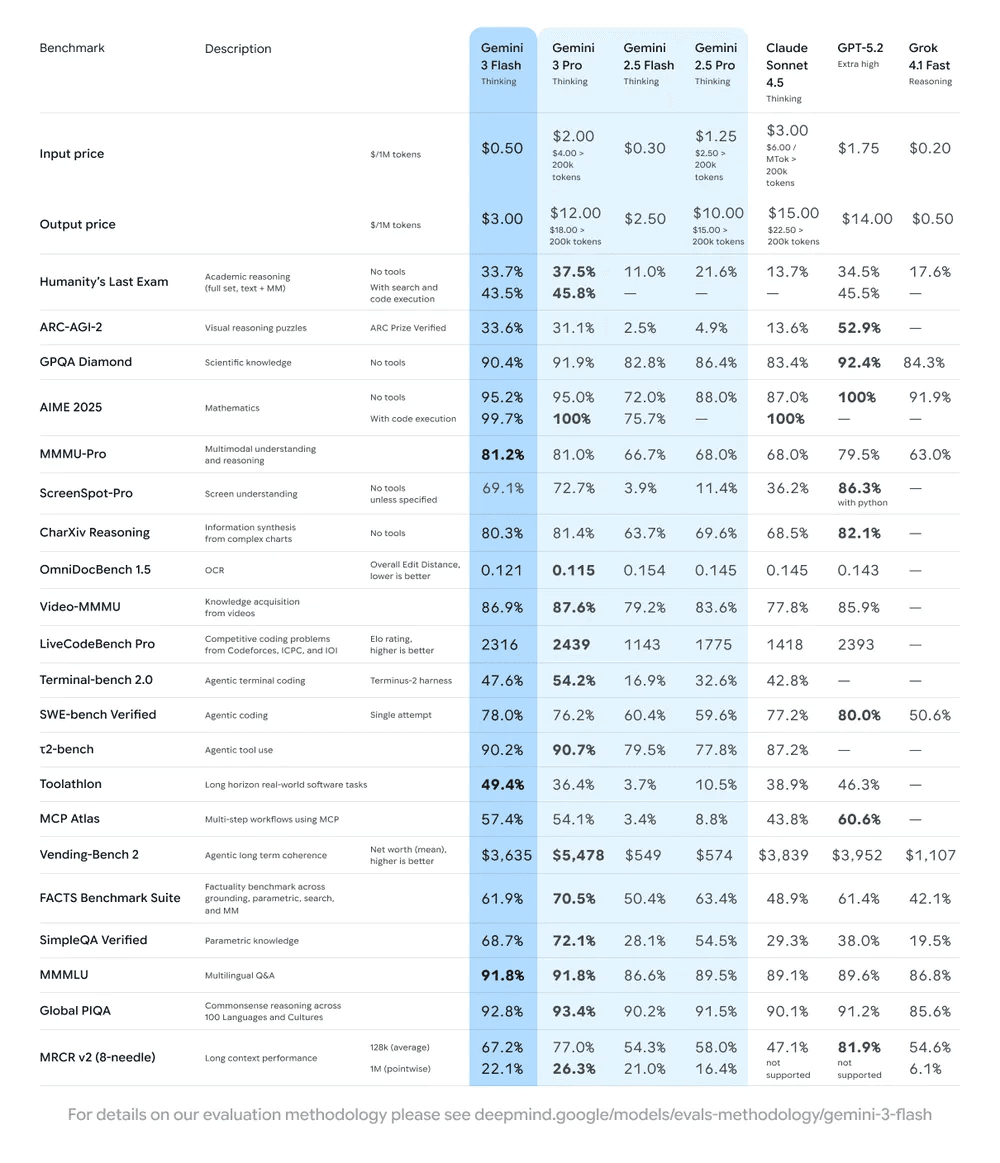

Benchmarks:

Efficiency: ~30% fewer tokens on average than Gemini 2.5 Pro (measured on typical traffic) while completing everyday tasks with higher performance.

Speed: 3× faster than Gemini 2.5 Pro (based on Artificial Analysis benchmarking, per Google).

Coding agent benchmark: 78% on SWE-bench Verified.

Pricing (Gemini API / Vertex AI): $0.50 / 1M input tokens, $3 / 1M output tokens, and audio input $1 / 1M input tokens.

Translation: fast, smart, and less likely to eat your token budget like it’s an all-you-can-eat buffet 🍽️😄
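To put those prices in concrete terms, here is a minimal Python sketch that estimates a bill from token counts. The per-million prices are the ones quoted above; the function name `estimate_cost`, its parameters, and the sample token counts are made up for illustration, and the sketch ignores anything this post does not cover (free tiers, caching discounts, and so on).

```python
from decimal import Decimal

# USD per 1M tokens, as quoted in this post for the Gemini API / Vertex AI.
PRICE_PER_MILLION = {
    "text_input": Decimal("0.50"),
    "audio_input": Decimal("1.00"),
    "output": Decimal("3.00"),
}

ONE_MILLION = Decimal(1_000_000)


def estimate_cost(text_input_tokens=0, audio_input_tokens=0, output_tokens=0):
    """Return the estimated cost in USD for the given token counts.

    Hypothetical helper: it only multiplies token counts by the quoted prices.
    """
    usage = {
        "text_input": text_input_tokens,
        "audio_input": audio_input_tokens,
        "output": output_tokens,
    }
    total = Decimal(0)
    for kind, tokens in usage.items():
        if tokens < 0:
            raise ValueError(f"{kind} token count must be non-negative")
        total += PRICE_PER_MILLION[kind] * Decimal(tokens) / ONE_MILLION
    return total


# Example: 2M text input tokens and 500k output tokens.
cost = estimate_cost(text_input_tokens=2_000_000, output_tokens=500_000)
print(f"${cost:.2f}")  # prints $2.50
```

Output tokens cost six times as much as text input tokens at these prices, so the "fewer tokens" efficiency claim matters most on the output side.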

Google positions Flash as proof that you can push the quality vs cost vs speed “Pareto frontier” without turning the model into a confused toaster. 🔥🍞

Google also says that at its highest thinking level, Flash can modulate how much it “thinks,” spending more compute on harder tasks and staying lean on simpler ones.

And yes, a Gemini 3 Flash Lite also gets a mention alongside their Pareto frontier chart: basically a “go even cheaper/faster” sibling.

Google frames Gemini 3 Flash as made for iterative development—fast loops where you prompt, test, tweak, ship, repeat (and pretend your first version was always the plan). 😄

They specifically call out:

Strong agentic coding capability (SWE-bench Verified 78%, outperforming Gemini 3 Pro in that benchmark per the post).

Fit for complex video analysis, data extraction, and visual Q&A—especially in apps that need quick answers and deep reasoning.

Demo-style examples like near real-time in-game assistance, A/B testing UI spinner designs, image captioning with contextual overlays, and generating multiple design variations from a single prompt.

Also: Google says companies like JetBrains, Bridgewater Associates, and Figma are already using it, and it’s available to enterprises via Vertex AI and Gemini Enterprise.

Google says Gemini 3 Flash is now the default model in the Gemini app (replacing 2.5 Flash), giving users the Gemini 3 experience at no cost, and it highlights practical multimodal use cases for everyday users.

Google says Gemini 3 Flash is starting to roll out as the default model for AI Mode in Search, focusing on nuanced question parsing and giving “visually digestible” responses that can pull real-time local information and helpful links from across the web—useful for things like last-minute trip planning.

Google says Gemini 3 Flash is available in preview via the Gemini API (Google AI Studio), Google Antigravity, Vertex AI, and Gemini Enterprise, plus tools like Gemini CLI and Android Studio—and it’s rolling out in the Gemini app and AI Mode in Search.

Continue exploring these related topics

Claude Haiku 4.5 offers near-frontier AI performance at double the speed and one-third the cost. Discover how it changes the game.

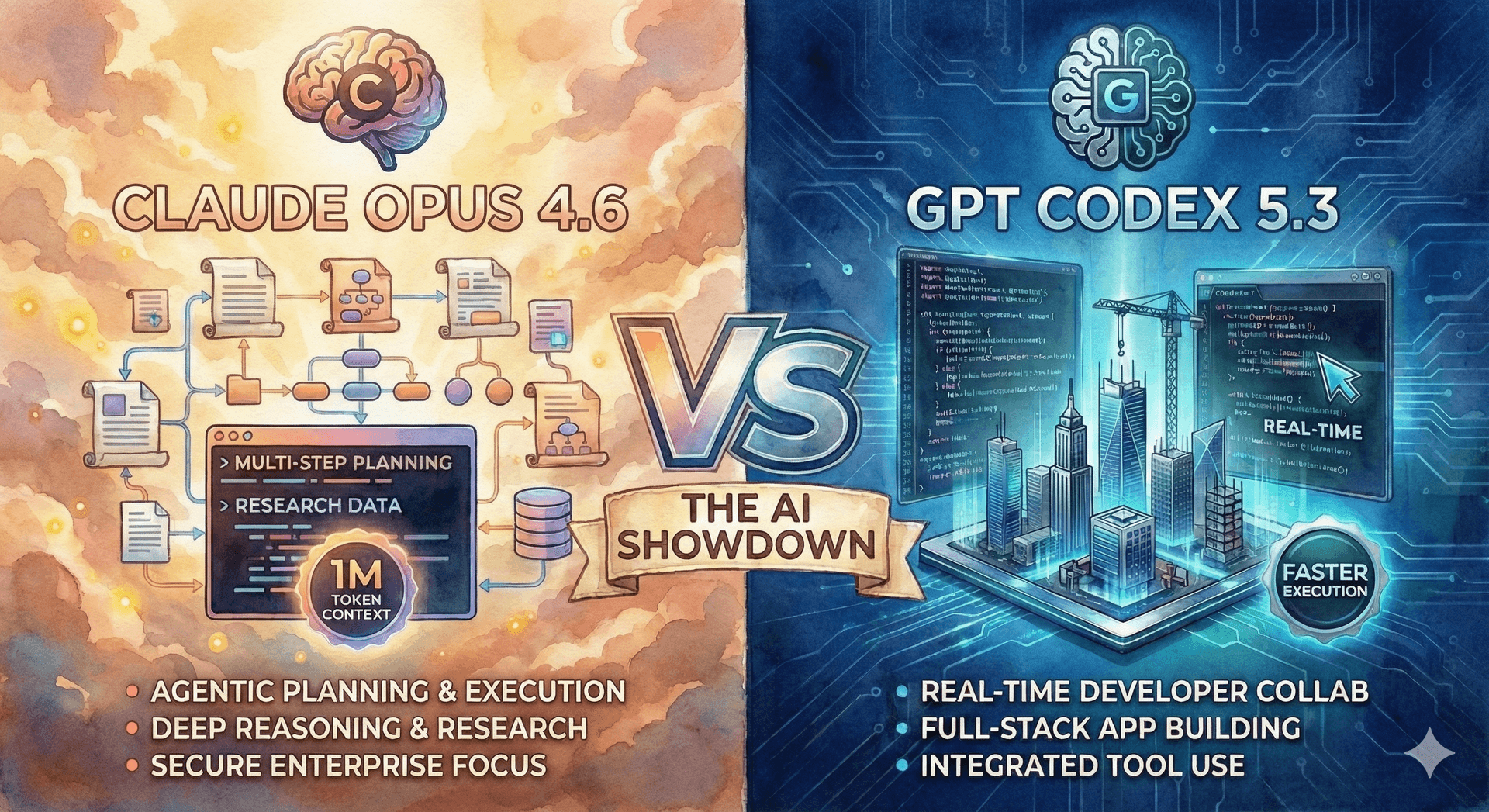

Opus 4.6 and GPT-5.3 Codex are redefining AI specialization. Compare writing, coding, reasoning, and performance to choose the right model for your workflow.

Flash is open-weights and local-ready. FlashX is the hosted speed tier. Compare stats, context length, pricing, and best use-cases.