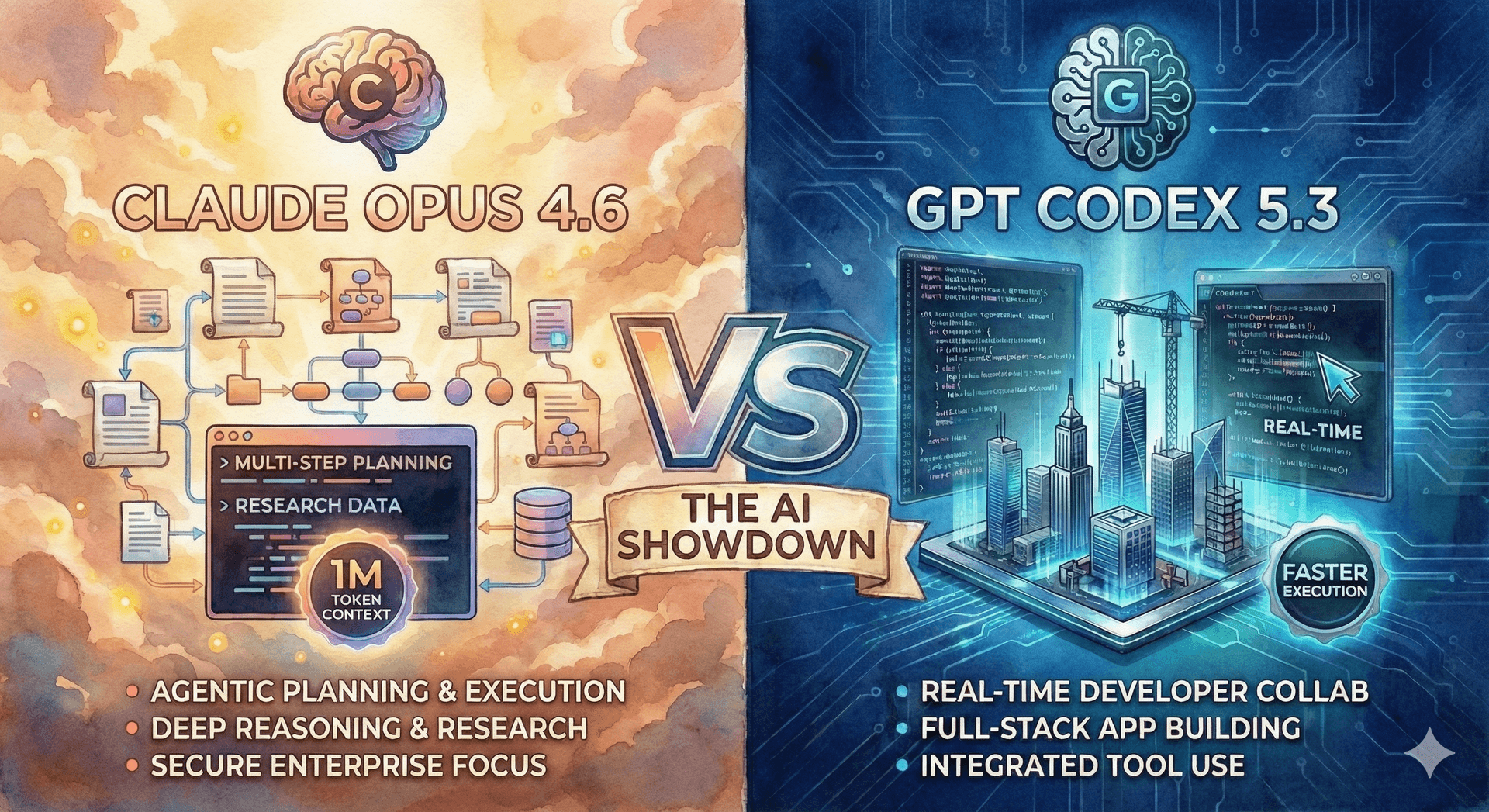

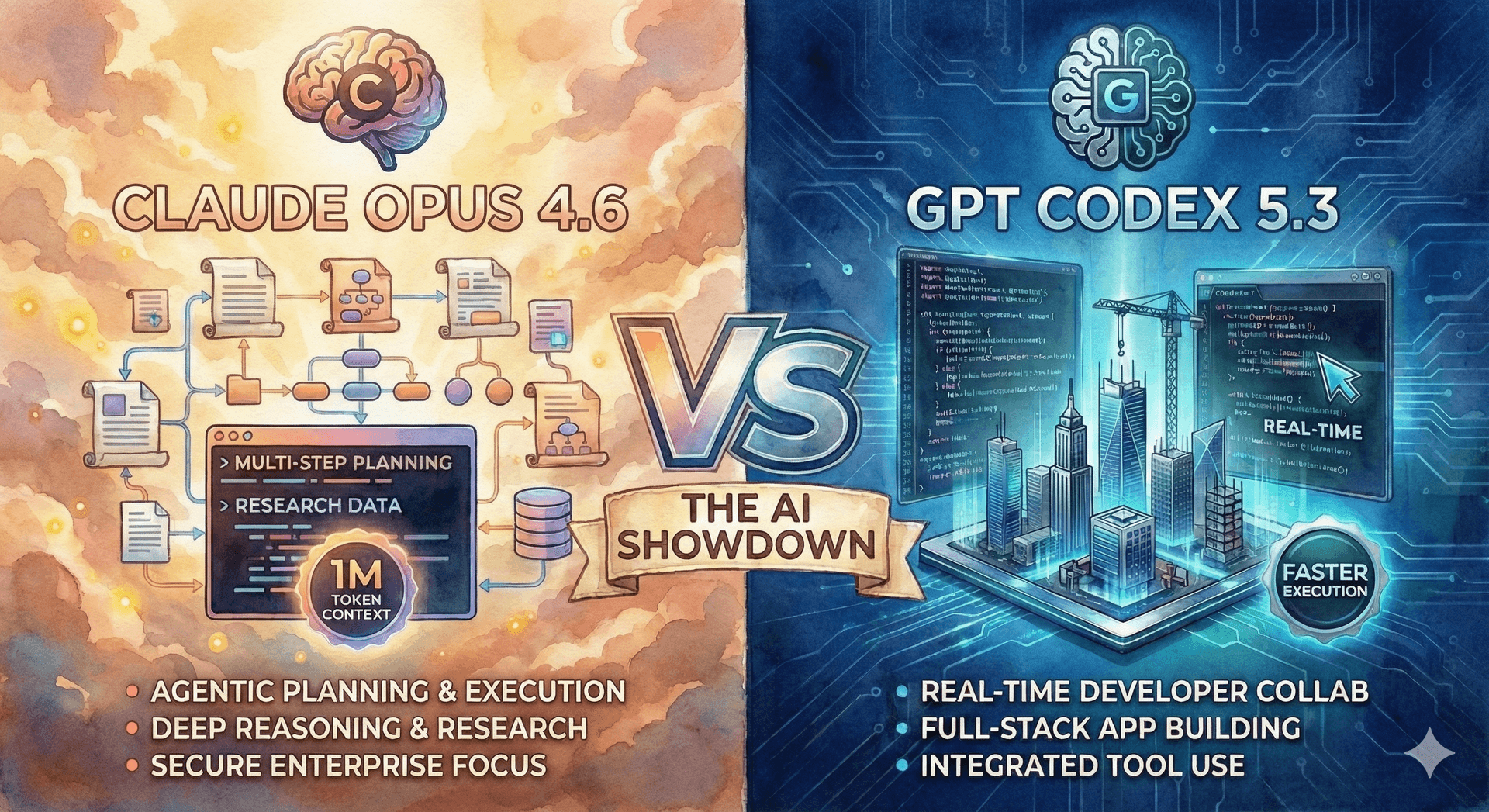

Meta has launched Llama 4, its latest suite of open-weight, natively multimodal AI models. Llama 4 Scout and Llama 4 Maverick are available now, while Llama 4 Behemoth is still in training.

The Llama 4 series includes three models:

Llama 4 Scout: Efficient, runs on a single GPU, with a 10 million token context window, excelling in long-context tasks.

Llama 4 Maverick: Flagship model with strong coding support, a 1 million token context window, and excellent image and text understanding across 12 languages.

Llama 4 Behemoth: A powerful teacher model (288 billion active parameters) that is still in training. Meta reports that it outperforms models such as GPT-4.5 on STEM benchmarks.
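To make the context-window figures above concrete, here is a minimal sketch that checks which models could hold a prompt of a given size. The window sizes come from the list above; the helper name `models_that_fit` and the `reserved_output_tokens` parameter are hypothetical, and Behemoth is left out because no context window is stated for it.

```python
# Context windows (in tokens) as stated in the model list above.
# Behemoth is omitted: the article gives no context window for it.
CONTEXT_WINDOWS = {
    "Llama 4 Scout": 10_000_000,
    "Llama 4 Maverick": 1_000_000,
}


def models_that_fit(prompt_tokens, reserved_output_tokens=0):
    """Return the models whose window can hold the prompt plus the reserved output.

    Hypothetical helper for illustration only; real deployments may expose
    smaller limits than the headline context window.
    """
    needed = prompt_tokens + reserved_output_tokens
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]


print(models_that_fit(250_000))    # ['Llama 4 Scout', 'Llama 4 Maverick']
print(models_that_fit(3_000_000))  # ['Llama 4 Scout']
```

A 3 million token prompt, for instance, would only fit Scout under these figures.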

What the benchmarks say:

The Mixture-of-Experts (MoE) architecture allows these models to achieve high performance with fewer active parameters.
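To illustrate the idea of "fewer active parameters", here is a toy sketch of generic top-k expert routing in plain Python. It is not Llama 4's actual implementation: the sizes (`DIM`, `NUM_EXPERTS`, `TOP_K`), the random weights, and the function names are all assumptions chosen for illustration.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def moe_forward(token, router, experts, top_k):
    """Route one token through only the top_k highest-scoring experts."""
    logits = matvec(router, token)  # one routing score per expert
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    gates = softmax([logits[i] for i in chosen])  # mixing weights for the chosen experts
    output = [0.0] * len(token)
    for gate, idx in zip(gates, chosen):
        expert_out = matvec(experts[idx], token)  # only chosen experts do any work
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen


# Toy sizes, assumed for illustration only.
DIM, NUM_EXPERTS, TOP_K = 4, 8, 2
rng = random.Random(0)
experts = [[[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(DIM)]
           for _ in range(NUM_EXPERTS)]
router = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
token = [rng.uniform(-1, 1) for _ in range(DIM)]

output, chosen = moe_forward(token, router, experts, TOP_K)
total_params = NUM_EXPERTS * DIM * DIM
active_params = TOP_K * DIM * DIM
print(f"experts used for this token: {chosen}")
print(f"active expert parameters: {active_params} of {total_params}")
```

In this toy setup each token touches 32 of the 128 expert parameters, so the layer stores the capacity of eight experts while paying the compute cost of two.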

Llama 4 offers several key advancements:

Native Multimodality: Understands and processes text and images together.

Mixture-of-Experts (MoE) Architecture: Enhances efficiency by activating only a fraction of parameters.

Massive Context Window: Up to 10 million tokens in the Scout model.

Enhanced Multilingual Understanding: Trained on over 30 trillion tokens across 200 languages.

Advanced Reasoning and Coding Skills: Maverick shows strong coding performance, rivaling DeepSeek v3.1 in some evaluations.

Llama 4 demonstrates significant progress over its predecessors, Llama 2 and Llama 3, in several key areas, including multimodality and context window size. According to Meta's published benchmarks, Llama 4 Scout outperforms models like Gemma 3 and Mistral 3.1. Llama 4 Maverick shows performance comparable to DeepSeek v3.1 in reasoning and coding, and surpasses GPT-4o and Gemini 2.0 Flash in certain evaluations. The experimental chat version of Maverick achieved a notable ELO score on the LMArena benchmark. Furthermore, Llama 4 Behemoth is reported to outperform top-tier models like GPT-4.5 on STEM benchmarks. Performance benchmarks for different hardware configurations are also available, showcasing the efficiency of Llama 4.

Here is the link to find the Llama 4 models:

https://huggingface.co/collections/meta-llama/llama-4-67f0c30d9fe03840bc9d0164

Continue exploring these related topics

Opus 4.6 and GPT-5.3 Codex are redefining AI specialization. Compare writing, coding, reasoning, and performance to choose the right model for your workflow.

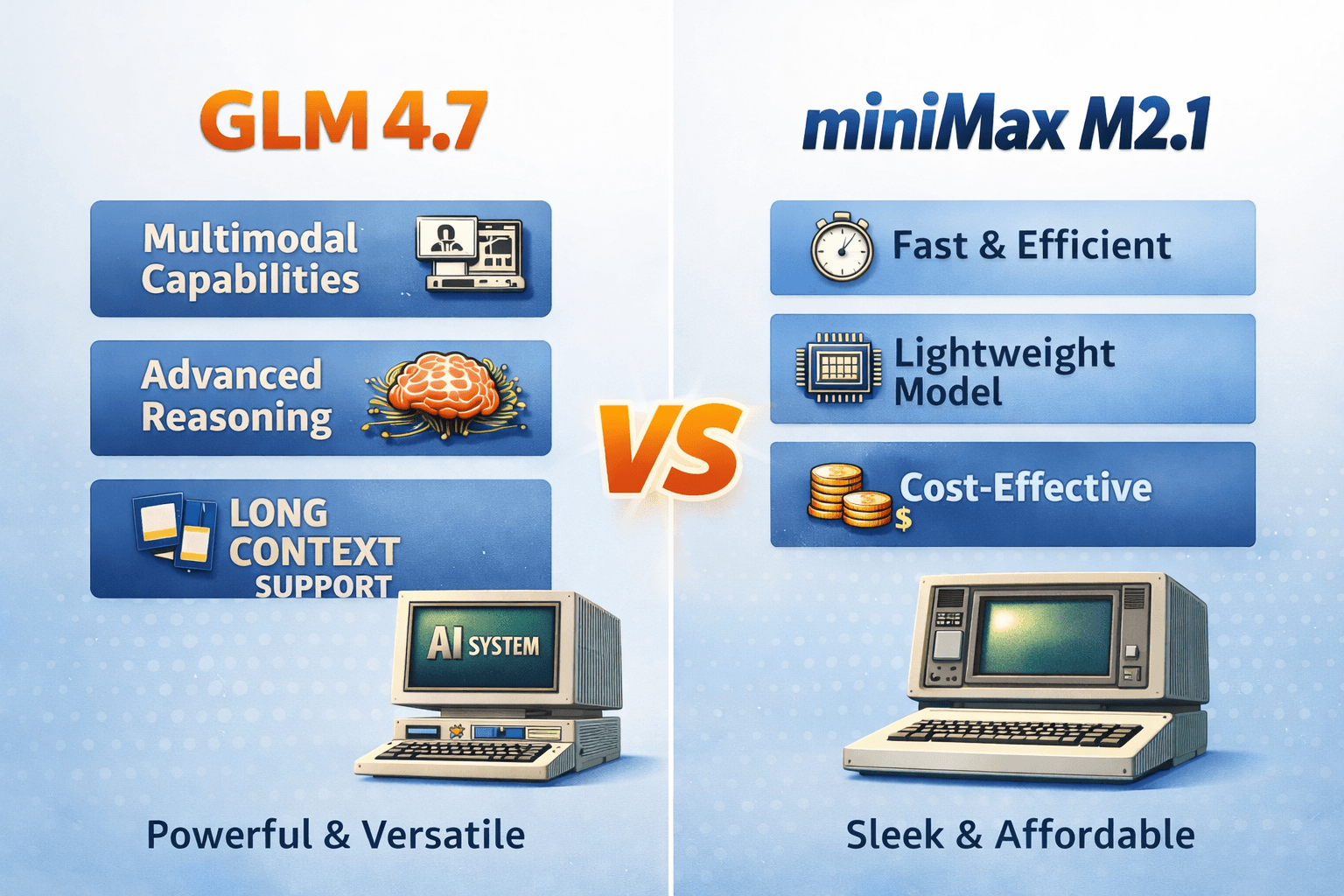

GLM 4.7 vs MiniMax M2.1—open models that surprisingly rival Opus 4.5 vibes. We break down strengths, tradeoffs, and when to use each in production.

Meet the new ChatGPT Images model (GPT Image 1.5): generate and edit AI images up to 4× faster with sharper prompts and consistent faces/logos.