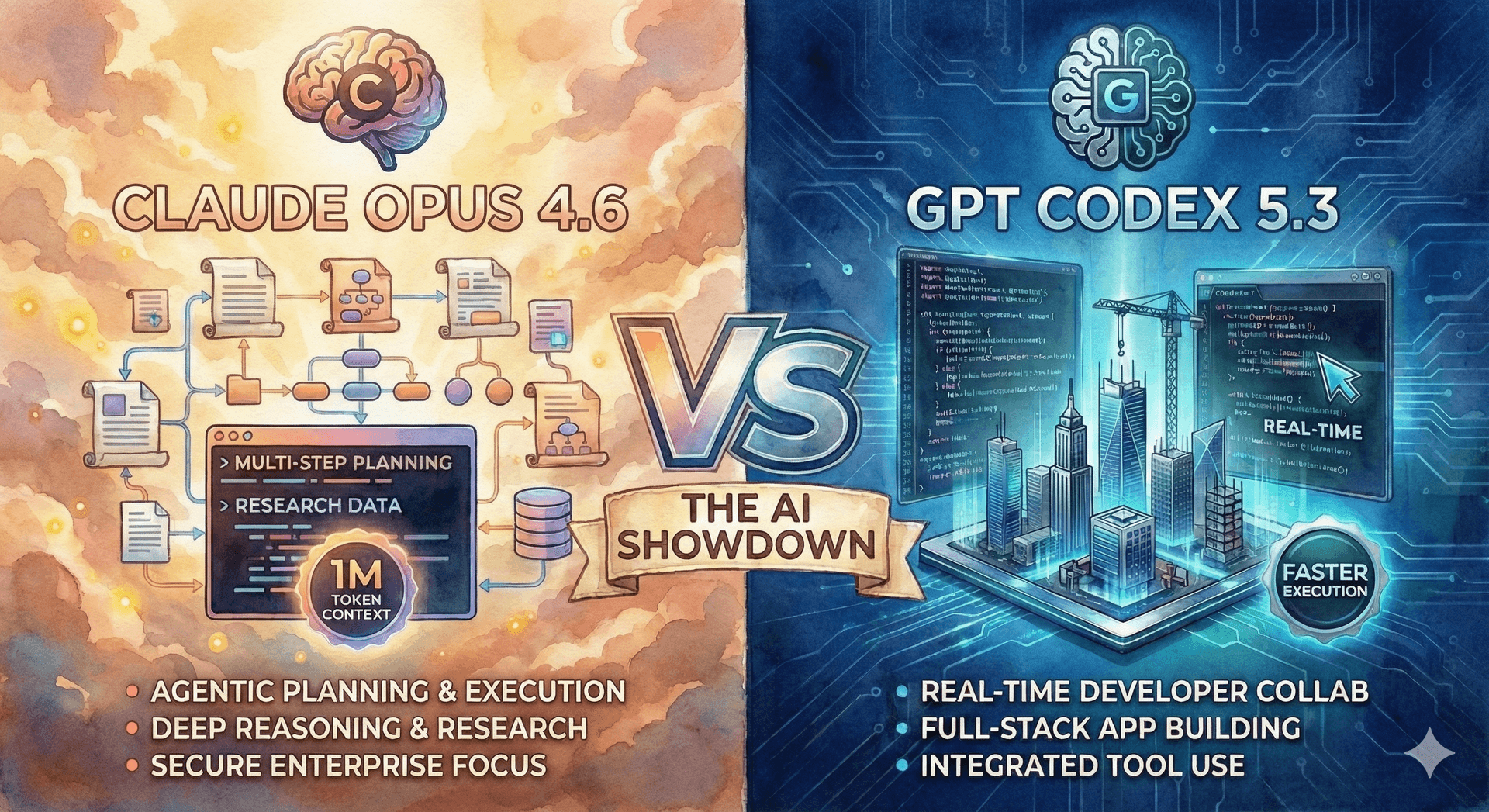

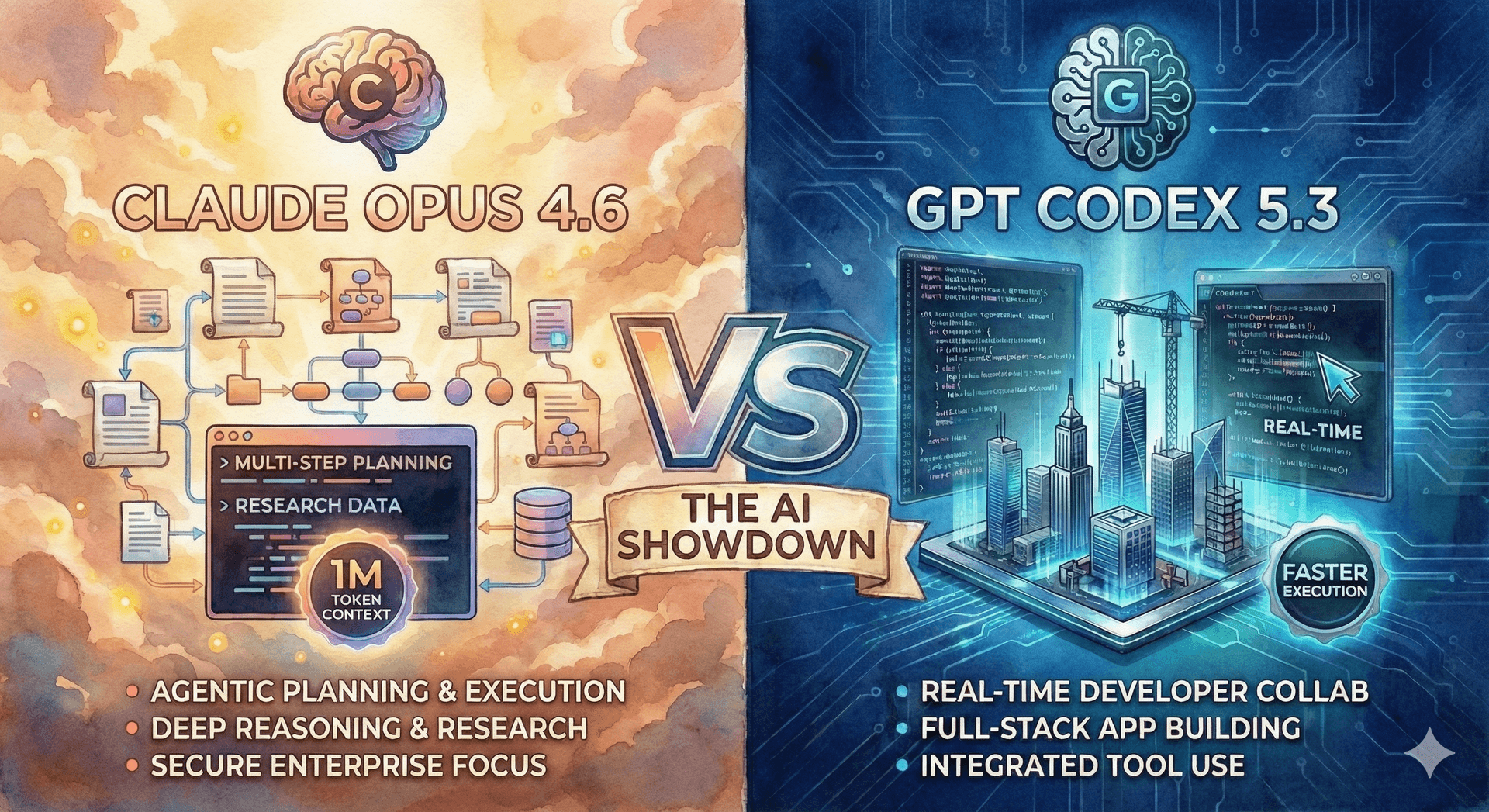

Pruna AI's groundbreaking open-source framework democratizes AI model optimization, empowering developers to cut costs and accelerate innovation.

In the ever-evolving landscape of artificial intelligence, efficiency is king. Pruna AI, a forward-thinking European startup, has just made a monumental move by open-sourcing its AI model optimization framework. This isn't just another open-source release; it's a potential game-changer for developers seeking to streamline and enhance their AI applications.

Why This Matters:

Democratizing AI Efficiency:

For too long, cutting-edge model compression techniques were the exclusive domain of tech giants with vast resources. Pruna AI's initiative levels the playing field, giving teams of all sizes access to powerful optimization tools.

This means smaller startups and individual creators can now build more efficient, cost-effective, and scalable AI solutions.

A Unified Optimization Toolkit:

Pruna AI's framework consolidates various optimization methods—caching, pruning, quantization, and distillation—into a single, easy-to-use platform.

This eliminates the need for developers to piece together disparate tools, saving valuable time and resources.

Real-World Impact:

The framework is designed to deliver tangible results, reducing computational requirements and lowering inference costs.

Imagine AI models that are significantly smaller and faster, without sacrificing quality. Pruna AI has demonstrated this, with examples of Llama models shrunk to one-eighth of their original size.
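To get a feel for what an eightfold reduction means, here is a small back-of-the-envelope sketch. It is plain arithmetic, not a description of how Pruna AI obtained its result: it assumes a hypothetical 7-billion-parameter model and compares storing each weight in 32 bits versus 4 bits. The function name and the parameter count are illustrative assumptions.

```python
def model_size_gb(num_params: int, bits_per_weight: int) -> float:
    """Approximate weight storage in gigabytes (10**9 bytes), ignoring metadata."""
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical parameter count, chosen only for illustration.
NUM_PARAMS = 7_000_000_000

fp32_gb = model_size_gb(NUM_PARAMS, 32)  # 32-bit floating-point weights
int4_gb = model_size_gb(NUM_PARAMS, 4)   # 4-bit quantized weights
ratio = fp32_gb / int4_gb

print(f"32-bit: {fp32_gb:.1f} GB, 4-bit: {int4_gb:.1f} GB, ratio: {ratio:.0f}x")
```

Under these assumptions the weights drop from about 28 GB to about 3.5 GB. Real-world savings depend on which techniques are combined and on how much accuracy loss is acceptable.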

Setting a New Standard:

Pruna AI aspires to become the "Hugging Face" of AI efficiency, establishing a standardized approach to model optimization.

This could lead to greater interoperability and collaboration within the AI community.

Diving Deeper into the Framework:

Comprehensive Optimization Techniques:

The framework integrates a suite of advanced techniques, allowing developers to fine-tune their models for optimal performance.

Pruning: Like trimming unnecessary branches from a tree, this removes parts of the model that contribute little to its output.

Quantization: Reducing the precision of the model's numerical values, which shrinks the model's size.

Distillation: Using a larger "teacher" model to train a smaller "student" model to mimic its behavior.
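The three techniques above can be sketched in a few lines of pure Python. This is a simplified illustration of the general ideas, not Pruna AI's implementation or API. All function names are hypothetical, the pruning shown is simple magnitude pruning, the quantization is symmetric 8-bit, and the distillation loss is a temperature-softened KL divergence between teacher and student outputs.

```python
import math


def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)  # number of weights to drop
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_magnitude[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate float values from the quantized integers."""
    return [v * scale for v in quantized]


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with a temperature that softens the output."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Toy weights and logits, made up for illustration.
weights = [0.8, -0.05, 0.3, -1.2, 0.01, 0.6]
pruned = magnitude_prune(weights, sparsity=0.5)
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
loss = distillation_loss([2.0, 1.0, 0.1], [1.5, 1.2, 0.3])
```

Here `pruned` keeps only the three largest-magnitude weights, `restored` matches the original weights to within half a quantization step, and `loss` shrinks toward zero as the student's outputs approach the teacher's. In practice, a distillation loss like this is usually combined with an ordinary training loss on labeled data.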

Focus on Practicality:

Pruna AI's emphasis on evaluating trade-offs between model size, speed, and accuracy empowers developers to make informed decisions.

This ensures that optimized models not only perform well but also meet specific application requirements.
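One simple way to act on such trade-offs is to state the application's requirements up front and pick the smallest model variant that satisfies them. The sketch below assumes a hypothetical `Candidate` record and made-up numbers; it is not part of Pruna AI's framework and the figures are not measurements of any real model.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    size_mb: float
    latency_ms: float
    accuracy: float


def pick_smallest_meeting(candidates, min_accuracy, max_latency_ms):
    """Return the smallest candidate meeting both constraints, or None."""
    eligible = [
        c for c in candidates
        if c.accuracy >= min_accuracy and c.latency_ms <= max_latency_ms
    ]
    return min(eligible, key=lambda c: c.size_mb, default=None)


# Made-up numbers for illustration only.
candidates = [
    Candidate("baseline", size_mb=1000.0, latency_ms=120.0, accuracy=0.90),
    Candidate("quantized", size_mb=250.0, latency_ms=60.0, accuracy=0.89),
    Candidate("quantized+pruned", size_mb=125.0, latency_ms=45.0, accuracy=0.84),
]

choice = pick_smallest_meeting(candidates, min_accuracy=0.88, max_latency_ms=100.0)
print(choice.name if choice else "no candidate meets the requirements")
```

With these example requirements, the baseline is too slow and the most aggressive variant is not accurate enough, so the quantized variant is selected. Changing the thresholds changes the answer, which is the point of evaluating the trade-off explicitly.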

The Future of AI Optimization:

Pruna AI's open-source framework marks a significant step towards a more efficient and accessible AI future. As AI continues to permeate various industries, the need for optimized models will only grow. Pruna AI is positioning itself at the forefront of this movement, empowering developers to build the next generation of AI applications.

Here is the website link: https://www.pruna.ai/

Continue exploring these related topics

Opus 4.6 and GPT-5.3 Codex are redefining AI specialization. Compare writing, coding, reasoning, and performance to choose the right model for your workflow.

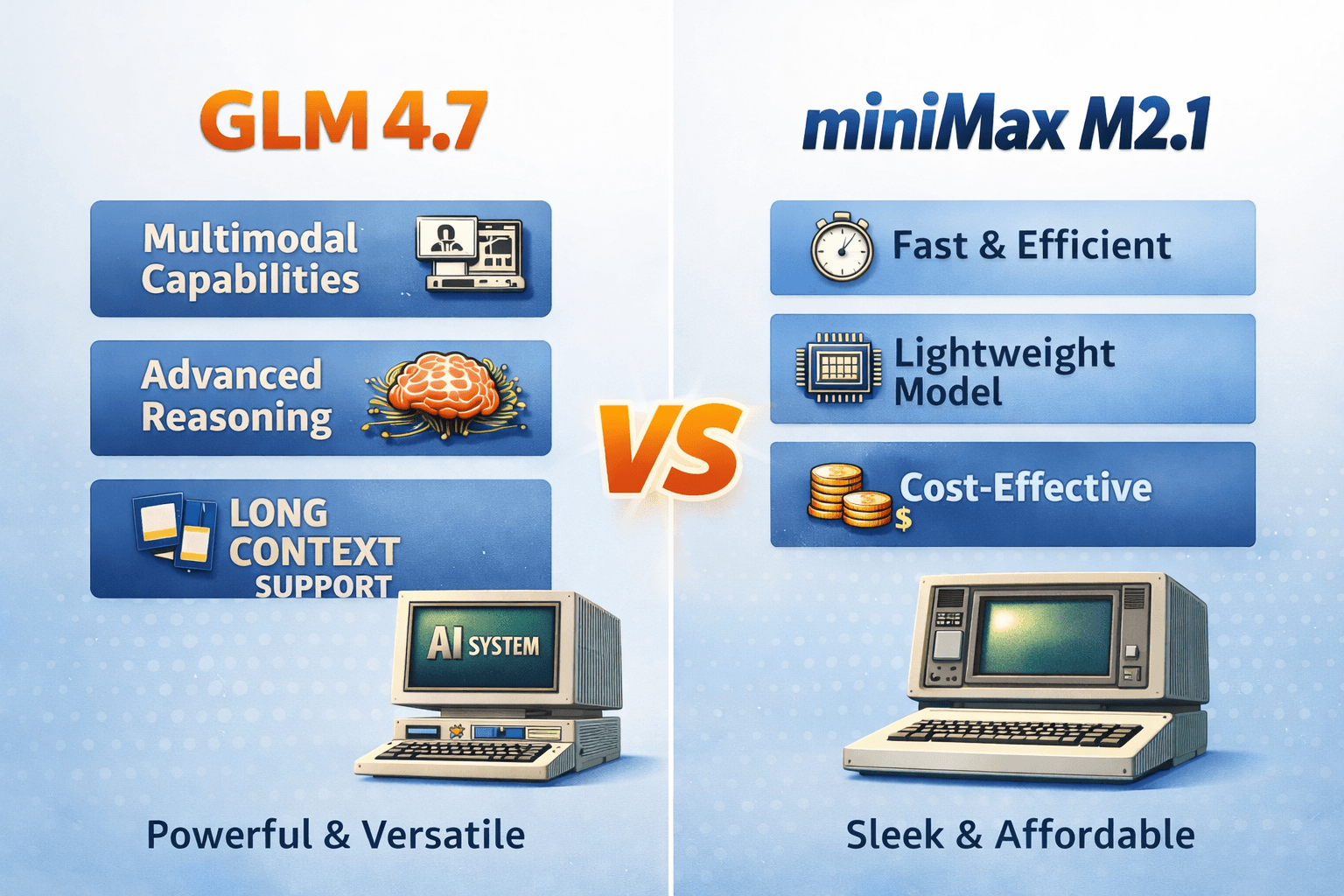

GLM 4.7 vs MiniMax M2.1—open models that surprisingly rival Opus 4.5 vibes. We break down strengths, tradeoffs, and when to use each in production.

Meet the new ChatGPT Images model (GPT Image 1.5): generate and edit AI images up to 4× faster with sharper prompts and consistent faces/logos.